A comparative analysis of Zoom Video SDK

Zoom Video SDK Performance Report

- 01 Overview

- 02 Assessing Video SDK Quality

- 03 Performance Results and Analysis

- 04 Quality of Performance

- 05 Resource Management Under Unideal Network Conditions

- 06 CPU/RAM Usage

- 07 Conclusion

- 08 Appendix

TestDevLab, a provider of software quality assurance and custom testing tool development, conducted an analysis of the Zoom Video SDK and the Video SDKs of four other vendors: Agora, Vonage TokBox, Chime, and Twilio. The goal was to understand how each platform behaves and the resulting quality of each Video SDK. This analysis was commissioned by Zoom Communications, Inc. The findings in this report reflect TestDevLab testing results from May 12, 2022.

This report first describes considerations for assessing Video SDK quality. It then analyzes the results, focusing on quality of performance, preservation of bandwidth, and keeping central processing unit (CPU) and random-access memory (RAM) usage low under 25% packet loss. Details of the testing environment are provided in the appendix.

Zoom designed its Video SDK to be easy to use, lightweight, and highly customizable, and has invested heavily in its overall quality. Even in poor network scenarios, mobile use cases, and simulated rural or remote locations, the Zoom Video SDK produced strong test results.

TestDevLab also tested how each Video SDK handled limited resources such as bandwidth, CPU, and RAM. The Zoom Video SDK continued to perform well under these constraints.

TestDevLab helps startups and Fortune 500 companies worldwide accelerate release cycles, improve product quality, and enhance user experiences. Its services include innovative audio/video quality testing and benchmarking; functional, regression, security, and integration testing; and test automation services for SDKs, following best practices and using industry-standard testing tools and custom testing solutions.

When assessing Video SDK quality, there are many different aspects that need to be considered, including:

User Devices: TestDevLab used the same devices for every SDK so that the results could be compared directly.

Network Limitations: A comparative analysis requires controllable network conditions. TestDevLab focused on four network conditions: unlimited, limited bandwidth applied to the sender, limited bandwidth applied to the receiver, and random 25% packet loss. Each device was connected to its own router to ensure a quality connection.

Predictability & Repeatability: TestDevLab executed eight tests, split into test runs of four tests. Tests were run at different times to reduce the impact of global network congestion, unexpected service slowdowns, and similar factors. From these tests, TestDevLab selected the five with the most stable behavior.

Analysis: To analyze the results, TestDevLab performed in-process validation. It reviewed the results over time for all tests and spot-checked videos to confirm that the data matched a subjective assessment.
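The report does not define how "most stable behavior" was measured. As one possible reading, the sketch below ranks each test by the coefficient of variation (standard deviation divided by mean) of a per-test metric series and keeps the five least variable. The metric choice, the sample values, and the function names are hypothetical assumptions for illustration, not TestDevLab's method.

```python
from statistics import mean, pstdev


def coefficient_of_variation(samples: list[float]) -> float:
    """Relative spread of a metric series: population std dev divided by mean."""
    avg = mean(samples)
    return pstdev(samples) / avg if avg else float("inf")


def most_stable_tests(tests: dict[str, list[float]], keep: int = 5) -> list[str]:
    """Return the IDs of the `keep` tests whose metric series vary the least."""
    ranked = sorted(tests, key=lambda test_id: coefficient_of_variation(tests[test_id]))
    return ranked[:keep]


# Hypothetical video-delay samples (ms) for eight tests; not data from the report.
EXAMPLE_TESTS = {
    "t1": [200, 200, 200, 200],
    "t2": [200, 210, 190, 200],
    "t3": [200, 400, 100, 300],
    "t4": [220, 220, 230, 230],
    "t5": [180, 260, 150, 310],
    "t6": [240, 240, 240, 250],
    "t7": [205, 215, 205, 215],
    "t8": [300, 150, 450, 200],
}

if __name__ == "__main__":
    print(most_stable_tests(EXAMPLE_TESTS))  # ['t1', 't6', 't4', 't7', 't2']
```

Using a relative measure rather than raw standard deviation keeps tests with different baseline delays comparable; a real selection would likely combine several metrics.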

TestDevLab ran the tests for each scenario multiple times and, for all vendors, saw stable results across repeated runs of the same scenario. When analyzing the results, TestDevLab looked at:

Quality of Performance. TestDevLab analyzed audio delay and video delay under various network conditions. It also compared frame rates in frames per second (FPS) and video quality using Video Multimethod Assessment Fusion (VMAF).

Resource Management Under Unideal Network Conditions. TestDevLab looked at how vendors managed resources under packet loss.

CPU/RAM Usage. TestDevLab looked at how vendors consume resources while an application is under stress, e.g., when many participants' videos are rendered in a gallery view.
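The FPS metric mentioned above can be illustrated with a minimal sketch that derives average frames per second from the arrival timestamps of received frames. The function name and the timestamp input are assumptions for illustration; the report does not describe how TestDevLab captured frames. VMAF is not shown, since it requires comparing received frames against a reference video and is normally computed with Netflix's libvmaf.

```python
def average_fps(frame_timestamps_s: list[float]) -> float:
    """Average frames per second over the span covered by received-frame timestamps."""
    if len(frame_timestamps_s) < 2:
        raise ValueError("need at least two frame timestamps")
    duration_s = frame_timestamps_s[-1] - frame_timestamps_s[0]
    if duration_s <= 0:
        raise ValueError("timestamps must span a positive duration")
    # N timestamps bound N - 1 inter-frame intervals.
    return (len(frame_timestamps_s) - 1) / duration_s


if __name__ == "__main__":
    smooth = [i / 30 for i in range(31)]   # one second of frames at 30 FPS
    dropped = [i / 15 for i in range(16)]  # every other frame missing
    print(average_fps(smooth), average_fps(dropped))
```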

Quality of performance matters across a variety of network conditions. TestDevLab first measured audio delay, video delay, and frame rates on an unlimited network.

Audio delay was comparable across vendors, except for Chime, which had a slightly longer delay.

When comparing video delay, Zoom, Agora, Twilio, and Chime had video delays mostly below 250 ms. Vonage TokBox, however, had video delays ranging from 250 ms to more than 1,000 ms.

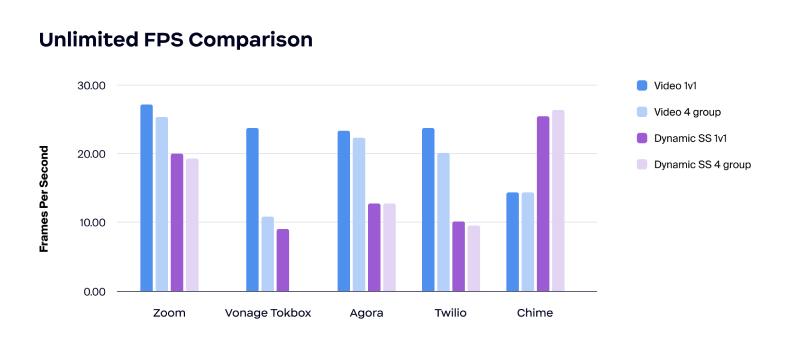

In the frame-rate comparison, Zoom had the highest frame rate on video calls.

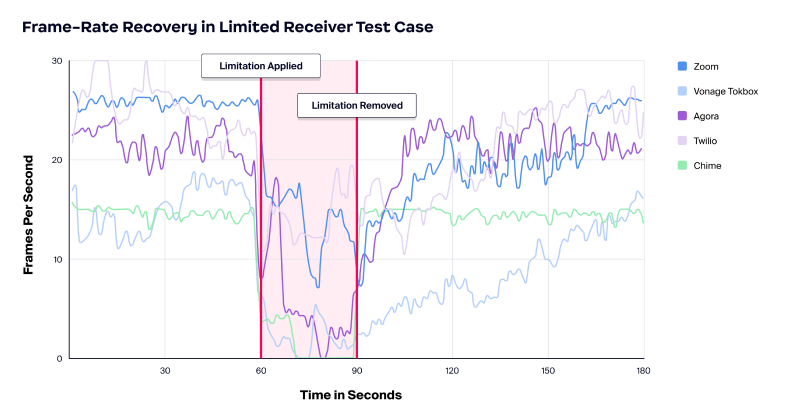

The results also showed that Zoom had the most consistent video quality across all tested network conditions. The test started with no bandwidth restrictions; then the same low-bandwidth restriction was applied to all vendors, first on the sender side and then on the receiver side.

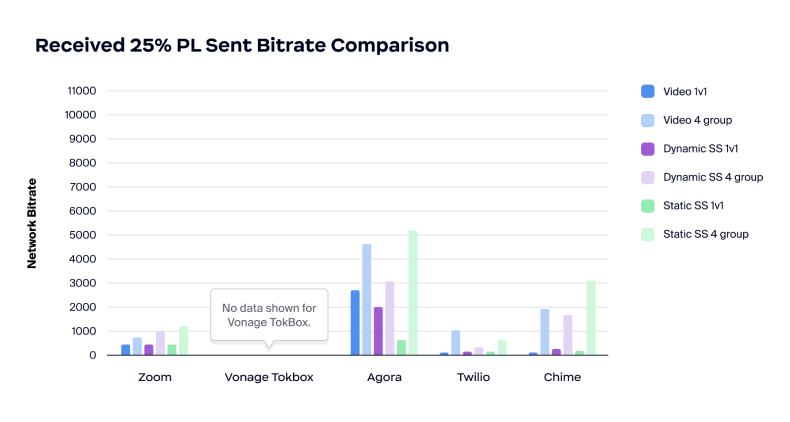

Next, TestDevLab looked at the preservation of resources under 25% packet loss. Packet loss can reduce effective network speed, cause bottlenecks, disrupt throughput, and be costly. It has many causes, most of them unintentional: network congestion, unreliable networks (especially mobile networks), software bugs, and overloaded devices are a few examples.

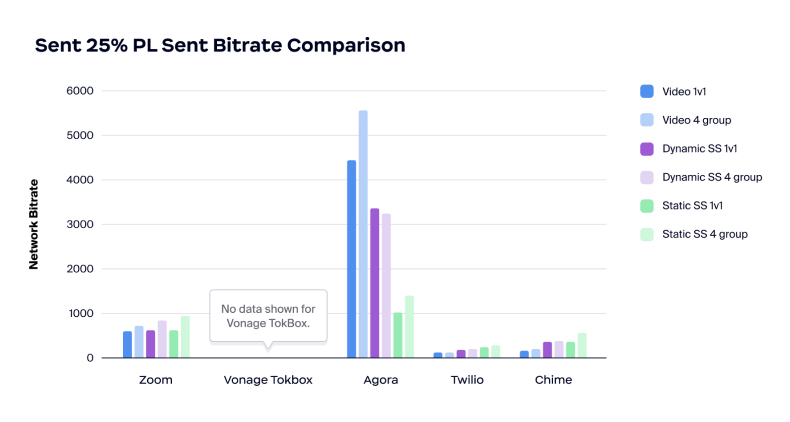

In the tests with 25% packet loss, Zoom preserved bandwidth well and kept CPU and memory usage low under packet loss and constrained network conditions. Zoom manages resources conservatively while maintaining call quality.

Agora, on the other hand, appears to take a different approach to packet loss, spending considerable bandwidth to compensate for it. If packet loss is caused by a bandwidth limit, consuming more bandwidth can make the problem worse.
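Why extra bandwidth can backfire on a bandwidth-limited link can be shown with a deliberately simple fluid model, assumed here for illustration: whatever is sent above the bottleneck capacity is dropped. The function name and the kbps figures are hypothetical, and the model does not describe how any vendor's SDK actually behaves.

```python
def congestion_loss(send_rate_kbps: float, capacity_kbps: float) -> float:
    """Fraction of traffic dropped at an oversubscribed bottleneck (simple fluid model).

    Assumes everything above the link capacity is lost and nothing below it is.
    """
    if send_rate_kbps <= 0:
        return 0.0
    return max(0.0, (send_rate_kbps - capacity_kbps) / send_rate_kbps)


if __name__ == "__main__":
    capacity = 1000.0  # hypothetical bottleneck, kbps
    base = 1250.0      # hypothetical media rate, already above capacity
    padded = base * 1.5  # same stream plus 50% redundancy added to "fight" the loss
    print(f"loss without redundancy: {congestion_loss(base, capacity):.0%}")
    print(f"loss with 50% redundancy: {congestion_loss(padded, capacity):.0%}")
```

Under this model, sending 1,250 kbps into a 1,000 kbps link loses 20% of packets, while padding the same stream to 1,875 kbps raises the loss to roughly 47%: when congestion is the cause, adding traffic increases loss instead of masking it.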

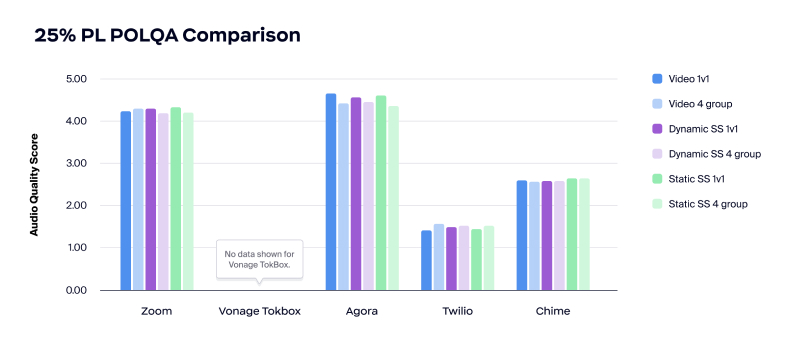

Under 25% packet loss, Zoom and Agora maintained good audio quality, with scores above 4.00 MOS (mean opinion score). Twilio's audio quality, however, was unusable and Chime's was close to unusable, with scores below 3.00 MOS.

When looking at audio delay under 25% packet loss, Zoom's delay increased by approximately 100 ms, while Agora's increased more significantly, by 200 to 250 ms, as it handled the packet loss.

In the network bitrate comparison, both Twilio and Chime were unstable and fell back to very low bitrates. Agora's bitrate, by contrast, was very high, suggesting that the product may not consider network congestion as a possible cause of packet loss.
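The MOS thresholds used above can be expressed as a small classifier. MOS is reported on a 1 to 5 scale (as in ITU-T P.800); the "good" and "poor" bands follow this report's wording (above 4.00, below 3.00), while the "fair" label for the range in between is an assumption added for illustration.

```python
def describe_mos(score: float) -> str:
    """Map a MOS value onto the quality bands used in this report.

    Bands above 4.00 and below 3.00 follow the report; "fair" is an assumed label.
    """
    if not 1.0 <= score <= 5.0:
        raise ValueError("MOS is reported on a 1-5 scale")
    if score > 4.0:
        return "good"
    if score < 3.0:
        return "poor (close to unusable or unusable)"
    return "fair"


if __name__ == "__main__":
    for mos in (4.2, 3.5, 2.5):
        print(mos, describe_mos(mos))
```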

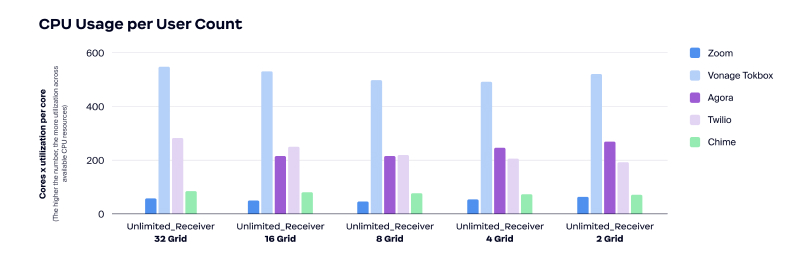

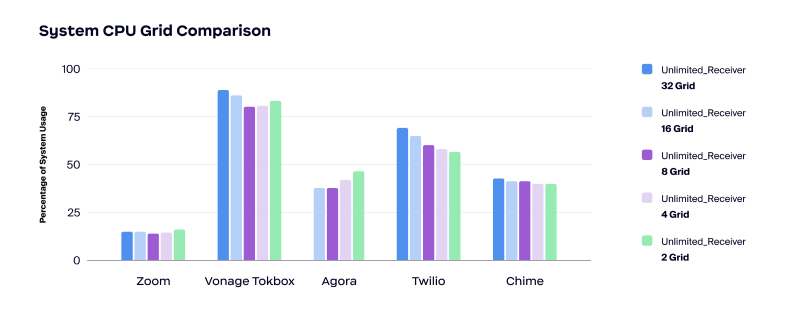

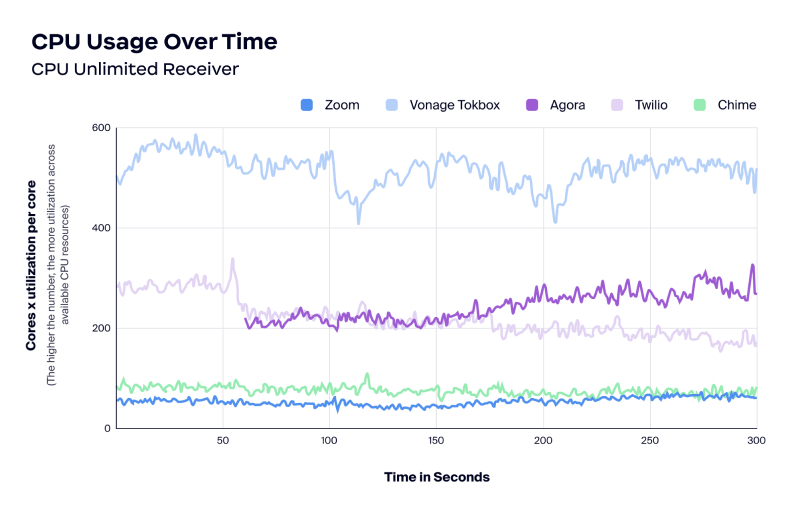

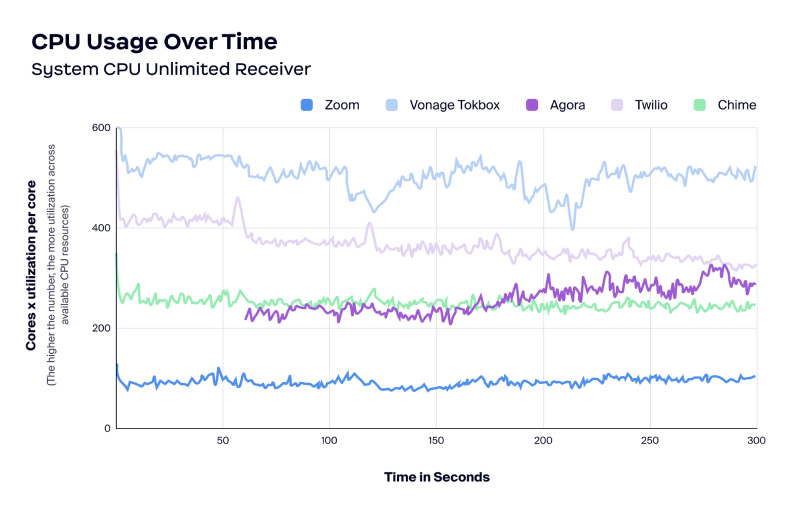

In terms of CPU usage, Zoom used the least CPU of the five vendors across the test scenarios.

Zoom also had the lowest RAM usage. As shown in the chart below, Twilio and Chime each used around 500 MB of RAM under 25% packet loss, and Agora used more than 3 GB on video calls.

Benefits of lower CPU and RAM usage include:

- Better user experience

- Better app performance with more resources available

- Fewer complaints about the app draining the battery

- The ability to run other applications alongside a video conference

Low CPU and RAM usage makes the SDK well suited to embedding real-time audio/video into other resource-heavy applications, such as video games and graphical collaboration tools for CAD and 3D design.

TestDevLab examined CPU usage per user count, CPU usage over time, and memory usage over time. Throughout these tests, the Zoom Video SDK kept CPU usage low, which, as noted above, supports a better user experience, better app performance, and longer battery life.

During the same tests, Agora was unable to host a 32-participant gallery view. In addition, Vonage TokBox consistently used more CPU than the other vendors.

The Zoom Video SDK is a good option for all network scenarios, including those with limited resources such as bandwidth, CPU, and RAM.

TestDevLab ran each scenario multiple times, and the results were consistent across runs. The Zoom Video SDK stood out for its:

- Quality of performance

- Bandwidth reliability

- CPU/RAM usage

Learn how to accelerate your development and build fully customizable video-based applications by visiting the Zoom Video SDK page.

Test Environment

The Video SDKs from Zoom, Agora, Vonage TokBox, Chime, and Twilio were tested in a set of predefined scenarios.

TestDevLab tested all five vendors across three test types, two participant counts, and four network conditions: unlimited, bandwidth restriction on the sender, bandwidth restriction on the receiver, and 25% packet loss. TestDevLab ran eight tests split into four test runs done at different times. From these, TestDevLab selected the five tests with the most stable behavior to produce the analysis and results.

To test CPU and RAM usage under different load levels, TestDevLab created a stress test that starts with a total of 48 users on the call. While video was streaming, TestDevLab changed the number of users shown in the gallery grid every 60 seconds to test the 32-, 16-, 8-*, 4-, and 2-user scenarios.
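The size of this test matrix follows from multiplying the dimensions: 5 vendors × 3 test types × 2 participant counts × 4 network conditions = 120 scenario combinations, each of which was then repeated. The sketch below enumerates them. The test-type names come from the appendix's list of scenarios; the two participant counts are hypothetical placeholders, since the report does not state them.

```python
from itertools import product

VENDORS = ["Zoom", "Agora", "Vonage TokBox", "Chime", "Twilio"]
TEST_TYPES = ["video call", "dynamic screen-share", "static screen-share"]
PARTICIPANT_COUNTS = [2, 4]  # hypothetical: the report does not give the two counts
NETWORK_CONDITIONS = [
    "unlimited",
    "sender bandwidth restriction",
    "receiver bandwidth restriction",
    "25% packet loss",
]

# Every combination of vendor, test type, participant count, and network condition.
SCENARIOS = list(product(VENDORS, TEST_TYPES, PARTICIPANT_COUNTS, NETWORK_CONDITIONS))

if __name__ == "__main__":
    print(len(SCENARIOS))  # 5 * 3 * 2 * 4 = 120
```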
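The stress-test timeline can be sketched as a list of back-to-back 60-second phases, one per grid size. This assumes the phases run in the order listed, start at t = 0, and have no gaps between them, which the report does not state explicitly; the function and constant names are hypothetical.

```python
def grid_schedule(grid_sizes: list[int], step_s: int = 60) -> list[tuple[int, int, int]]:
    """Return (start_s, end_s, users_on_grid) for each back-to-back stress-test phase."""
    return [(i * step_s, (i + 1) * step_s, size) for i, size in enumerate(grid_sizes)]


CALL_PARTICIPANTS = 48
GRID_SIZES = [32, 16, 8, 4, 2]  # the 8-user phase ran but was excluded from the analysis

if __name__ == "__main__":
    for start, end, size in grid_schedule(GRID_SIZES):
        print(f"{start:>3}-{end:>3} s: {size} of {CALL_PARTICIPANTS} users on the grid")
```

Under these assumptions the full sweep takes five minutes, with the 2-user phase running from 240 s to 300 s.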

For the performance testing, the TestDevLab platform was configured the following way:

- Sender device: MSI Katana GF66 11UD, Intel Core i7-11800H, 8GB RAM, 512GB SSD, NVIDIA GeForce RTX 3050 Ti 4GB

- Receiver device: Lenovo ThinkPad E495 (20NE-001GMH), AMD Ryzen 5 3500U, 16GB RAM, 512GB SSD, AMD Radeon Vega 8

- Video call resolution: 1080×720

- Screen-share resolution: 1920×1080 (native screen resolution)

- Video frame-rate: 30 FPS

- Video bitrate: 3000 kbps

TestDevLab conducted video call, dynamic screen-share, and static screen-share test scenarios for the performance analysis. Each scenario was tested five times with different numbers of participants. The following process was applied:

- Apply network limitation

- Create a call with sender device

- Join the call with receiver device and extra participants

- Start streaming video or screen-sharing

- In parallel, gather raw test data

- When the video ends, leave the call in the reverse order of joining

- Process the raw data

- Validate the processed data
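The procedure above can be sketched as an ordered sequence of steps. The "leave in the reverse order of joining" rule behaves like a stack: the last participant to join is the first to leave. The record type, function, and participant labels are hypothetical, and the sketch assumes the receiver joins before the extra participants, which the report does not specify; real SDK calls and data capture are replaced by logged step names.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioRun:
    """Hypothetical record of one test run: steps taken plus join/leave order."""
    steps: list[str] = field(default_factory=list)
    joined: list[str] = field(default_factory=list)
    left: list[str] = field(default_factory=list)


def run_scenario(network_limit: str, extra_participants: int) -> ScenarioRun:
    run = ScenarioRun()
    run.steps.append(f"apply network limitation: {network_limit}")
    # The sender creates the call, so it is the first participant in.
    run.joined.append("sender")
    run.steps.append("sender creates call")
    # Assumption: the receiver joins before the extra participants.
    run.joined.append("receiver")
    run.joined.extend(f"extra-{i}" for i in range(1, extra_participants + 1))
    run.steps.append("receiver and extra participants join")
    run.steps.append("stream video or share screen while gathering raw data")
    # Leave in the reverse of joining order: last in, first out.
    run.left.extend(reversed(run.joined))
    run.steps.append("participants leave in reverse join order")
    run.steps.append("process raw data")
    run.steps.append("validate processed data")
    return run


if __name__ == "__main__":
    result = run_scenario("25% packet loss", extra_participants=2)
    print(result.left)  # ['extra-2', 'extra-1', 'receiver', 'sender']
```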

* TestDevLab's test design also included an 8-participant gallery test; however, that test was implemented with an incorrect resolution, so its results are not included in the analysis or this report.