Zoom Phone Audio Quality Report 2025

Audio quality is at the core of every phone call. When speech is unclear, delayed, or drops out entirely, the call experience suffers and callers become frustrated. To understand how Zoom Phone compares with other leading cloud telephony solutions, Zoom commissioned TestDevLab to conduct an independent evaluation. Test scenarios included clean and impaired networks, congestion events, packet loss, and noisy environments across desktop and mobile platforms. The following providers were evaluated:

- Zoom Phone

- Microsoft Teams Phone

- Cisco Webex Calling

- RingCentral RingEX

- 8x8 Business Phone

- Dialpad Connect

The findings in this report reflect TestDevLab’s testing results from July 2025.

About TestDevLab

TestDevLab (TDL) provides software testing and quality assurance services for mobile, web, and desktop applications, along with custom testing tool development. The company specializes in advanced custom solutions for security, battery, and data usage testing of mobile apps. It also offers video and audio quality assessment for VoIP and communications apps, automated backend API testing, and web load and performance testing.

The TestDevLab team comprises more than 500 experienced engineers focused on software testing and development. Most of its test engineers hold International Software Testing Qualifications Board (ISTQB) certifications, reflecting expertise in testing to industry standards.

- Platforms:

  - macOS Sequoia 15.5 (MacBook Pro M1 2020)

  - Android 16 (Pixel 6a, Pixel 7)

  - iOS 18.5 (iPhone 14, iPhone 14 Pro Max)

- Applications tested: Zoom Phone, Microsoft Teams Phone, Cisco Webex Calling, RingCentral RingEX, 8x8 Business Phone, Dialpad Connect

- Repetitions: 5 tests per scenario

- Total sessions: 600 calls recorded and analyzed

Captured metrics

To provide a comprehensive view of call quality, TDL captured a range of metrics covering audio performance and network utilization. Data points were sampled throughout each call and aggregated into a central dataset for analysis.

Audio performance metrics

- POLQA (Perceptual Objective Listening Quality Analysis): ITU-T standard (P.863) for objective speech quality, widely used for benchmarking voice services.

- ViSQOL (Virtual Speech Quality Objective Listener): ML-based perceptual speech quality metric comparing transmitted audio against a reference signal.

- Audio Delay: End-to-end latency in audio transmission, measured in milliseconds.

Network metrics

- Sender bitrate: The amount of audio data transmitted per second.

- Receiver bitrate: The amount of audio data received per second.
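The report does not describe how bitrate was derived from the captured traffic. A minimal sketch, assuming each captured audio packet is available as a (timestamp in seconds, payload size in bytes) pair, bins packets into one-second windows and converts bytes to kilobits per second. The function name `bitrate_per_second` and its input format are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def bitrate_per_second(packets: Iterable[Tuple[float, int]]) -> Dict[int, float]:
    """Return kbps per whole-second window from (timestamp_s, size_bytes) pairs.

    Hypothetical helper: timestamps and sizes are assumed inputs, for example
    exported from a packet capture filtered to the audio stream.
    """
    bytes_per_second: Dict[int, int] = defaultdict(int)
    for timestamp_s, size_bytes in packets:
        # Assign each packet to the one-second window it arrived in.
        bytes_per_second[int(timestamp_s)] += size_bytes
    # 8 bits per byte, 1000 bits per kilobit.
    return {second: total * 8 / 1000 for second, total in sorted(bytes_per_second.items())}


# Illustrative input: one 160-byte packet every 20 ms for two seconds.
packets = [(i * 20 / 1000, 160) for i in range(100)]
print(bitrate_per_second(packets))  # {0: 64.0, 1: 64.0}
```

Computing the same series separately for the sender's outgoing and the receiver's incoming packets yields the two bitrate metrics listed above.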

Data collection methodology

- Audio metrics (e.g., POLQA, ViSQOL) were logged once every 12 seconds.

- All other metrics (DNSMOS, delay, bitrate) were captured at a rate of one data point per second, allowing fine-grained tracking of call behavior over time.
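Because audio-quality scores arrive every 12 seconds while delay and bitrate arrive every second, combining them in one dataset requires aligning the two rates. The report does not say how TDL did this. One simple approach, sketched here as an assumption, averages the per-second values over each 12-second window so that every POLQA or ViSQOL score has a matching delay figure. The function `window_means` is hypothetical.

```python
from statistics import mean
from typing import List, Sequence


def window_means(per_second: Sequence[float], window_s: int = 12) -> List[float]:
    """Average a one-sample-per-second series over consecutive windows.

    A trailing partial window is dropped so every value covers the same span.
    """
    full_windows = len(per_second) // window_s
    return [
        mean(per_second[i * window_s:(i + 1) * window_s])
        for i in range(full_windows)
    ]


# Illustrative input: 24 seconds of delay samples (ms) give two 12-second
# averages, one per audio-quality score logged in that period.
delays_ms = [100.0] * 12 + [130.0] * 12
print(window_means(delays_ms))  # [100.0, 130.0]
```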

Not all metrics could be captured across all platforms. For example, CPU and memory data were collected on macOS but not on mobile clients. TDL maximized the set of metrics collected per platform while ensuring comparability across vendors.

To compare Zoom Phone with the other cloud telephony solutions, TDL conducted tests across the most common call scenarios using representative desktop and mobile devices. Each test was run on current application versions, with controlled impairments introduced to measure how each platform adapts to varying conditions.

Test conditions – audio performance

To evaluate audio performance, calls were placed under a range of controlled network conditions designed to replicate real-world environments.

- Baseline

  Stable, unconstrained broadband (~30 Mbps) with no added noise or impairments.

- Changing packet loss

  An eight-minute test introduced progressively worsening packet loss:

  - Minute 1: 0%

  - Minute 2: 10%

  - … increasing in 10% increments until Minute 8: 70%

  This scenario simulated degrading network reliability and measured how each platform adapted to sustained impairment.

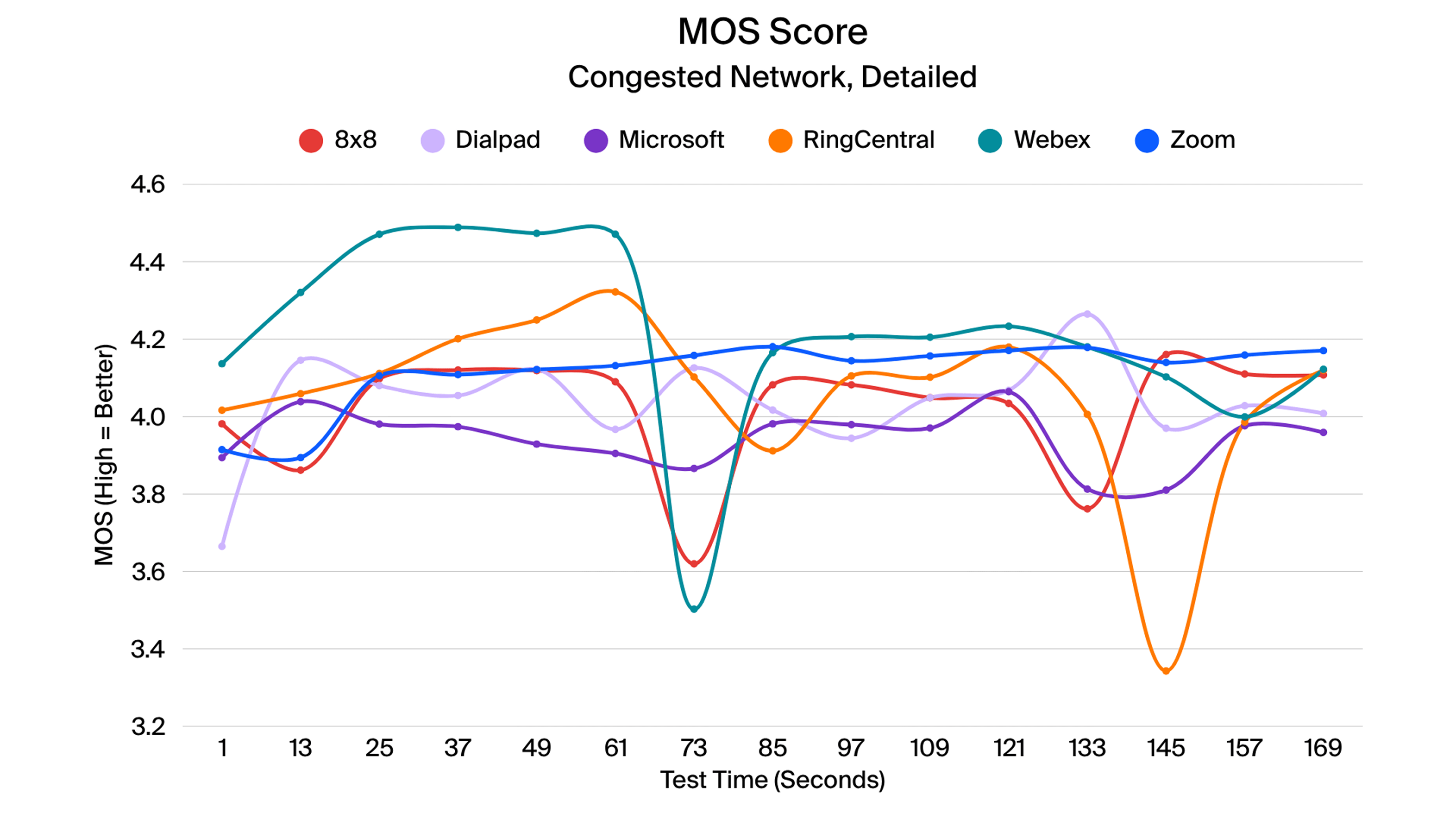

- Congested network

  The device’s network was capped at 10 Mbps and shared with a second device generating scripted congestion. The script simulated ten concurrent users, each consuming 1 Mbps, with bursts increasing in duration:

  - At 00:10, a 10 × 1 Mbps burst for 5 seconds

  - At 01:10, a 10 × 1 Mbps burst for 10 seconds

  - At 02:10, a 10 × 1 Mbps burst for 20 seconds
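The congestion script is described only by its timings. A minimal sketch of that schedule, assuming each burst starts at the stated offset and all ten simulated users transmit concurrently, lists the burst windows and compares the offered load with the 10 Mbps cap. The names `BURSTS` and `burst_windows` are illustrative, not TDL's script.

```python
from typing import List, Tuple

LINK_CAP_MBPS = 10  # Shared link cap from the scenario description
USERS = 10          # Simulated concurrent users
PER_USER_MBPS = 1   # Load generated by each simulated user

# (start offset "mm:ss", burst duration in seconds), as listed in the report
BURSTS = [("00:10", 5), ("01:10", 10), ("02:10", 20)]


def to_seconds(mm_ss: str) -> int:
    """Convert an "mm:ss" offset to seconds from the start of the test."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


def burst_windows() -> List[Tuple[int, int]]:
    """Return (start_s, end_s) for each congestion burst."""
    return [(to_seconds(start), to_seconds(start) + duration) for start, duration in BURSTS]


burst_load = USERS * PER_USER_MBPS
print(burst_windows())                         # [(10, 15), (70, 80), (130, 150)]
print(burst_load, LINK_CAP_MBPS - burst_load)  # 10 0
```

During each burst the congestion traffic alone matches the 10 Mbps cap, so the call under test must compete for bandwidth on a saturated link.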

Audio samples

- For each condition, a reference audio sample was played repeatedly to ensure consistency.

- Each scenario included 15 repetitions, except for the changing packet loss test, which included 40 repetitions to capture performance at each loss level.
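These numbers fit together if each repetition of the reference sample lasts 12 seconds, matching the 12-second interval at which POLQA and ViSQOL were logged: 40 repetitions then span the eight-minute packet-loss test, giving five scored samples at each loss level. The 12-second duration is an assumption inferred from the report, not a stated fact. The sketch below maps each repetition to the loss level in effect when it starts; `loss_at` and the constants are hypothetical names.

```python
from collections import Counter

SAMPLE_S = 12      # Assumed length of one reference-sample repetition
REPETITIONS = 40   # Repetitions in the changing packet loss test
STEP_PERCENT = 10  # Loss rises by 10 percentage points each minute


def loss_at(t_s: int) -> int:
    """Packet loss (%) in effect at t_s: 0% in minute 1, 10% in minute 2, ..."""
    return (t_s // 60) * STEP_PERCENT


# Loss level in effect at the start of each repetition
levels = [loss_at(i * SAMPLE_S) for i in range(REPETITIONS)]
per_level = Counter(levels)

print(REPETITIONS * SAMPLE_S / 60)  # 8.0
print(sorted(per_level.items()))
# [(0, 5), (10, 5), (20, 5), (30, 5), (40, 5), (50, 5), (60, 5), (70, 5)]
```

Under the same assumption, the 15 repetitions used elsewhere cover 180 seconds, long enough to include the last congestion burst, which ends at 02:30.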

Test setup & data capture process

Testing followed a standardized process to ensure repeatability across all scenarios.

Test steps

- Apply network limitation, if required by the scenario.

- Open the conferencing application.

- Initiate a call from the sender device to the receiver device.

- Mute the receiver’s microphone to isolate sender audio.

- Begin the test sequence.

- Start network impairment and capture scripts.

- End the test sequence.

- Both users disconnect from the call.
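The test steps can be sketched as a simple runner that executes each step in order and always disconnects the call, even if a step fails, so one bad run does not leave a call open for the next repetition. The step names mirror the list above, but the runner and its `run_test` function are hypothetical; TDL's actual automation is not described in the report.

```python
from typing import Callable, List, Optional


def run_test(
    steps: List[Callable[[], None]],
    disconnect: Callable[[], None],
    apply_limit: Optional[Callable[[], None]] = None,
) -> None:
    """Run one repetition: optional network limit, ordered steps, then disconnect."""
    if apply_limit is not None:
        apply_limit()  # Only for scenarios that require a network limitation
    try:
        for step in steps:
            step()
    finally:
        disconnect()  # Both users leave the call even if a step raised


log: List[str] = []


def record(name: str) -> Callable[[], None]:
    """Stand-in step that only records its name, for illustration."""
    return lambda: log.append(name)


run_test(
    steps=[record(name) for name in (
        "open application", "start call", "mute receiver",
        "begin sequence", "start impairment and capture", "end sequence",
    )],
    disconnect=record("disconnect both users"),
    apply_limit=record("apply network limitation"),
)
print(log)
```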

Data capture

- Network traffic from the test device was captured on separate monitoring networks.

- Audio output was recorded using an external script-based capture device.

- Recorded audio was compared with the original source audio after the test to assess quality.
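The report does not specify how end-to-end delay was computed from the recordings. A common technique, sketched here as an assumption rather than TDL's method, is to cross-correlate the recorded output with the reference audio and take the lag with the highest correlation as the delay. The pure-Python `estimate_delay_ms` below is a hypothetical, brute-force illustration on short signals. Tools that process full-length recordings typically use FFT-based correlation instead.

```python
from typing import Sequence


def estimate_delay_ms(
    reference: Sequence[float],
    recorded: Sequence[float],
    sample_rate_hz: int,
    max_lag: int,
) -> float:
    """Return the lag (in ms) at which `recorded` best matches `reference`.

    Brute-force cross-correlation over lags 0..max_lag samples. Assumes the
    recording lags the reference and never leads it.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Dot product of the reference with the recording shifted back by `lag`.
        score = sum(
            r * recorded[i + lag]
            for i, r in enumerate(reference)
            if i + lag < len(recorded)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag * 1000 / sample_rate_hz


# Illustrative input: a short pattern delayed by 40 samples at 8 kHz is 5 ms late.
reference = [0.0] * 10 + [1.0, -0.5, 0.8, -1.0, 0.3] + [0.0] * 10
recorded = [0.0] * 40 + reference
print(estimate_delay_ms(reference, recorded, sample_rate_hz=8000, max_lag=60))  # 5.0
```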

Across all platforms and scenarios, Zoom consistently delivers leading audio performance, with clear advantages under network stress conditions. Key takeaways from the study include:

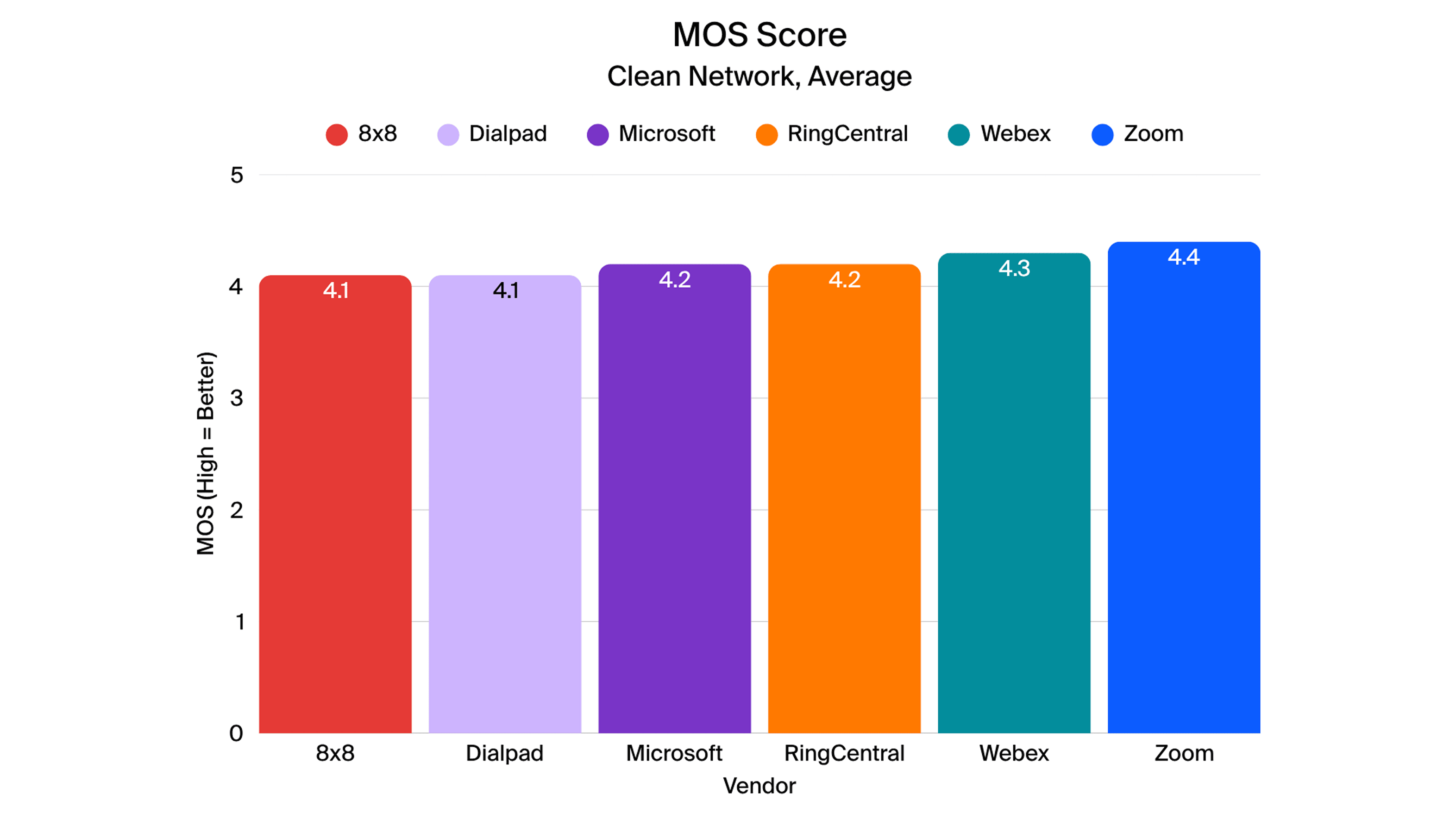

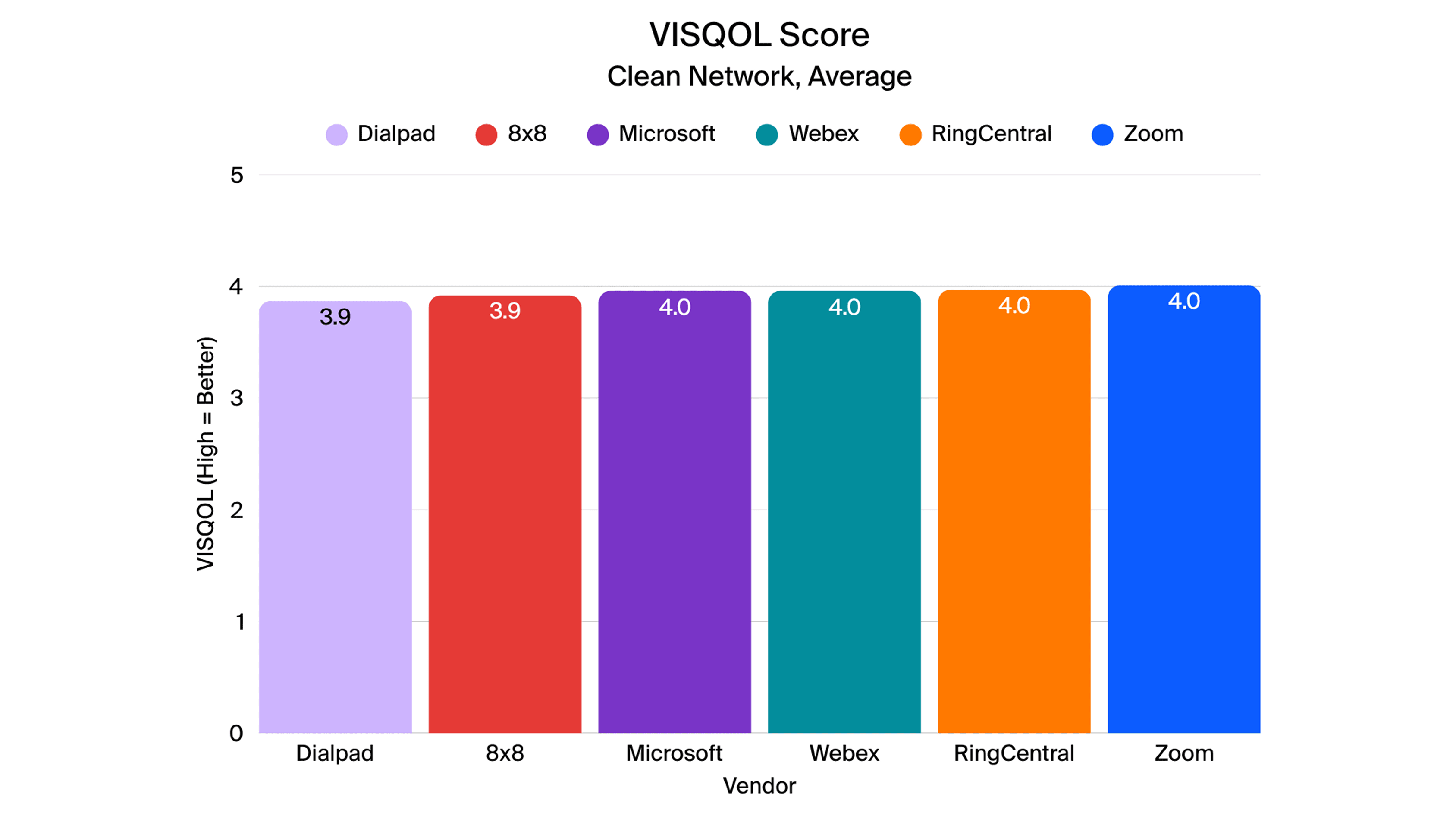

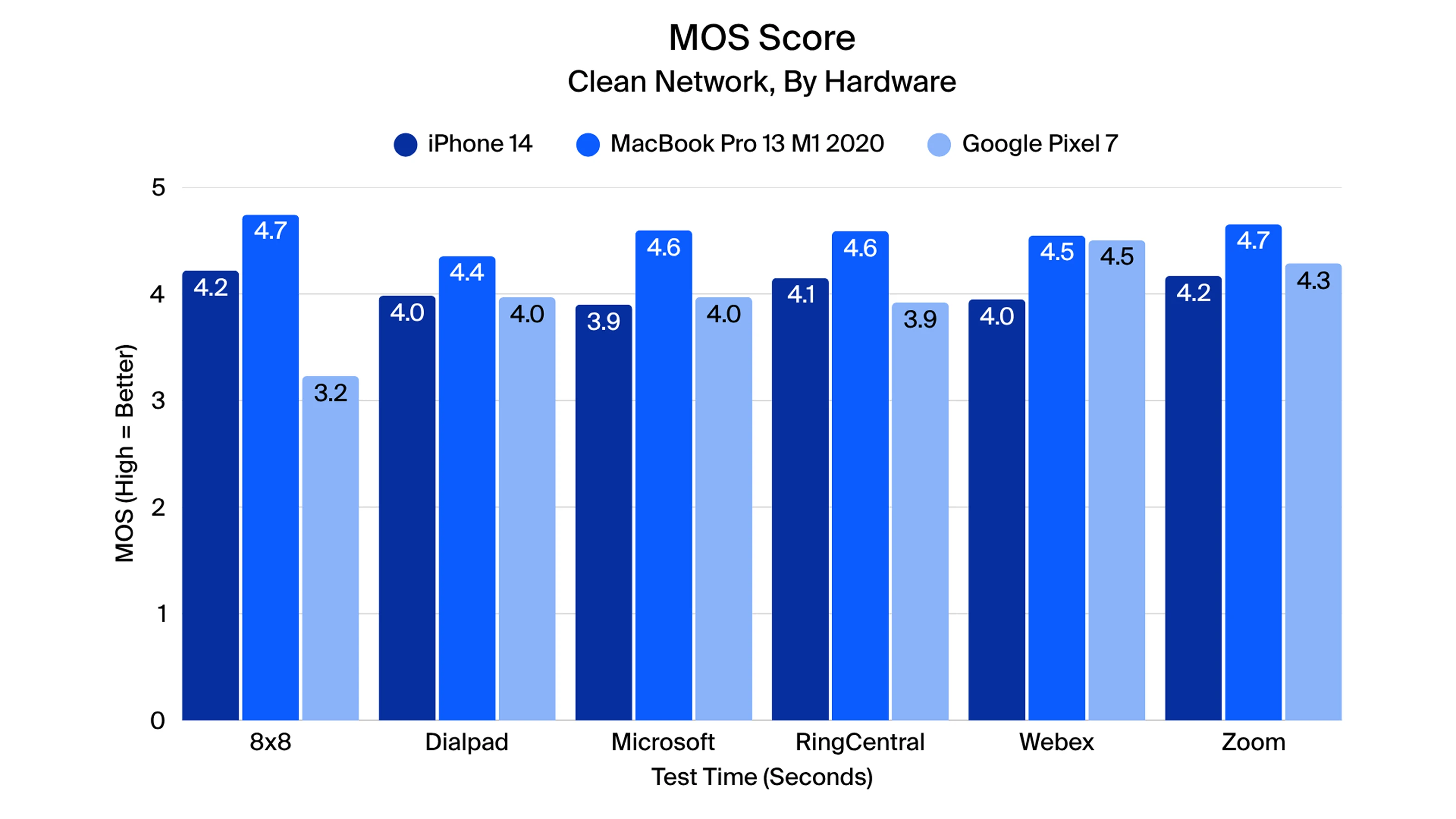

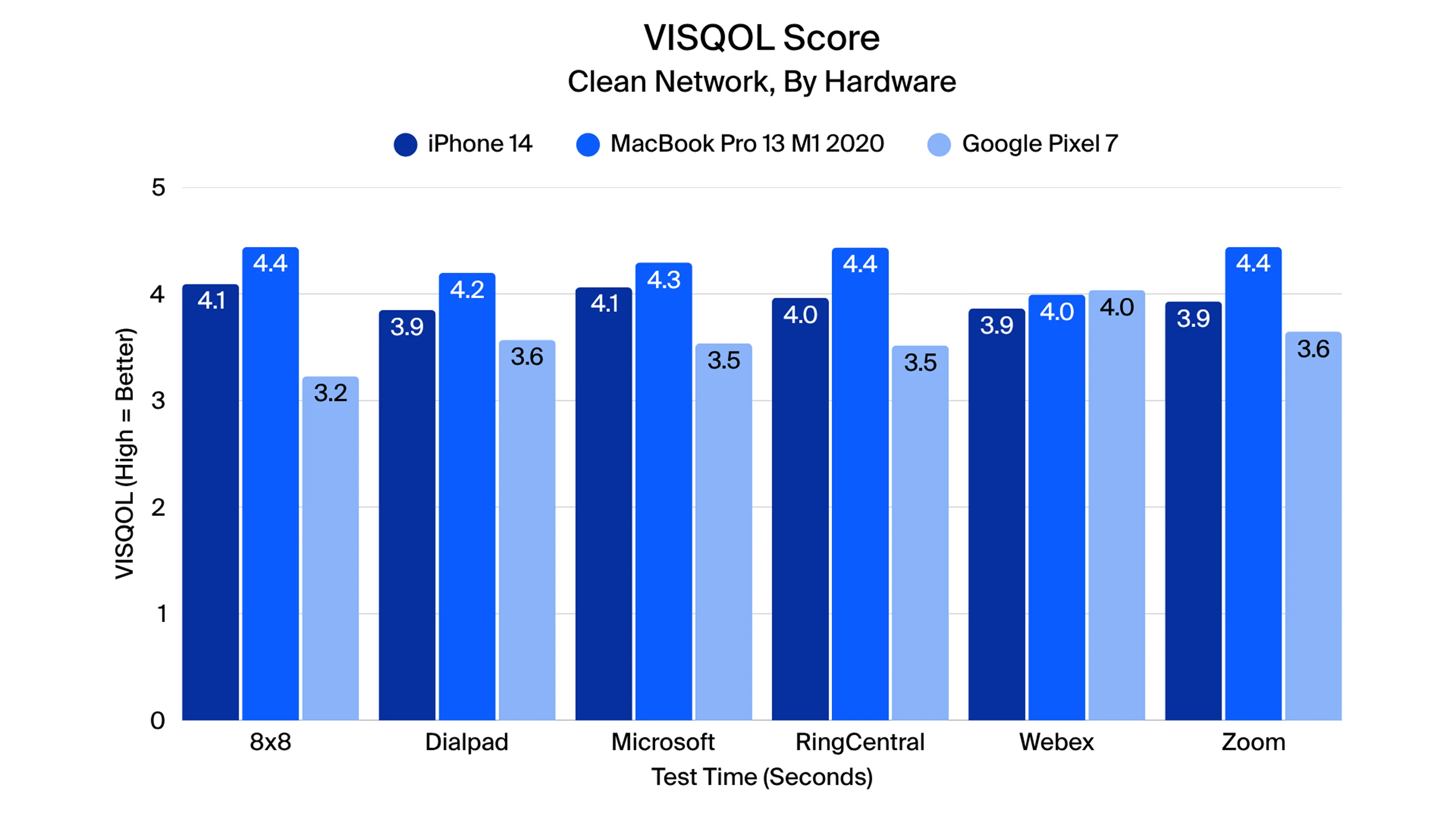

1. Superior performance on clean networks

   - On stable networks, Zoom achieves the best overall audio quality, with top POLQA MOS and ViSQOL scores. All platforms provided good audio quality, scoring at least 4.0 in these scenarios.

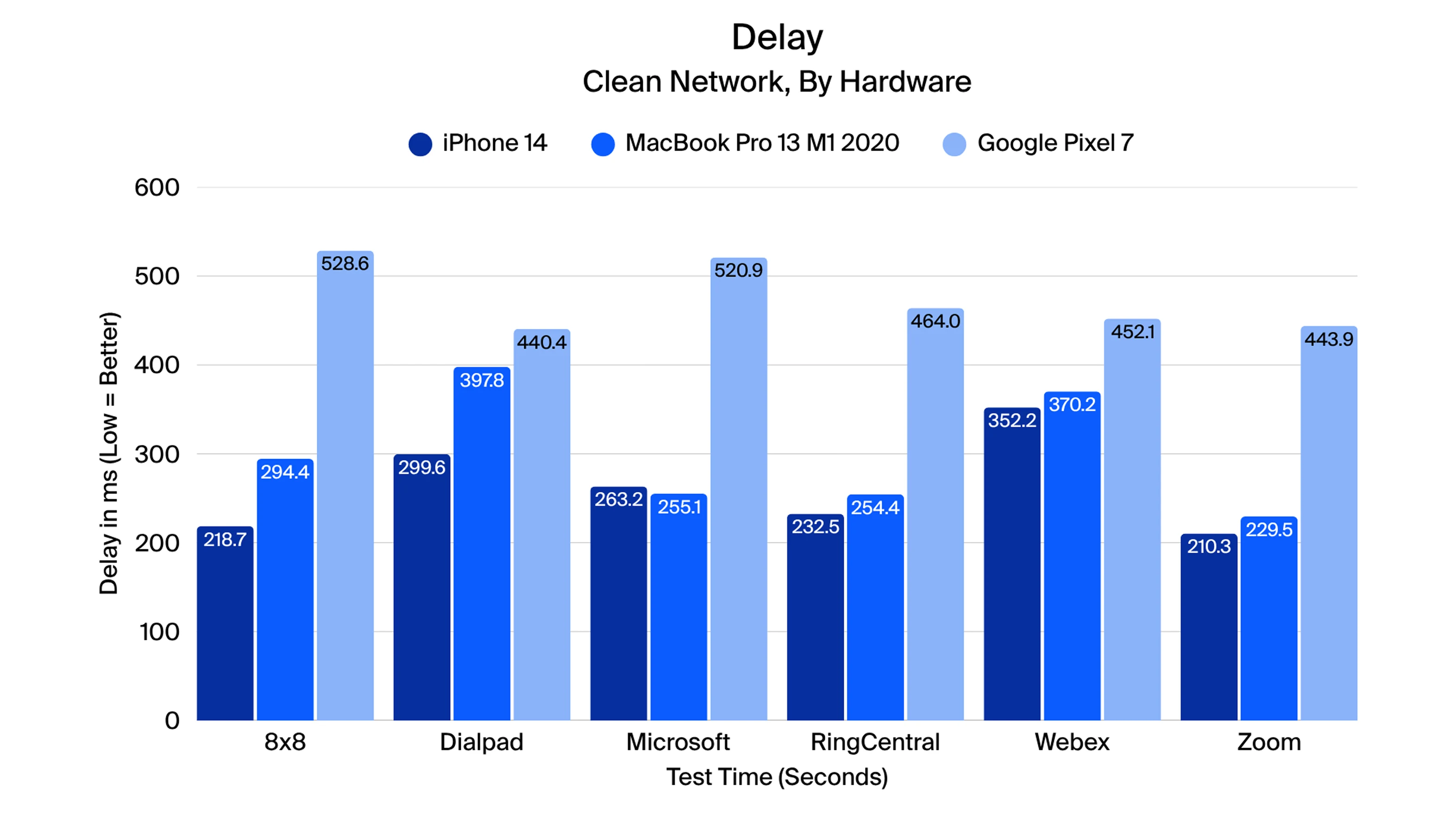

   - Zoom also provides the lowest end-to-end audio delay among all tested applications.

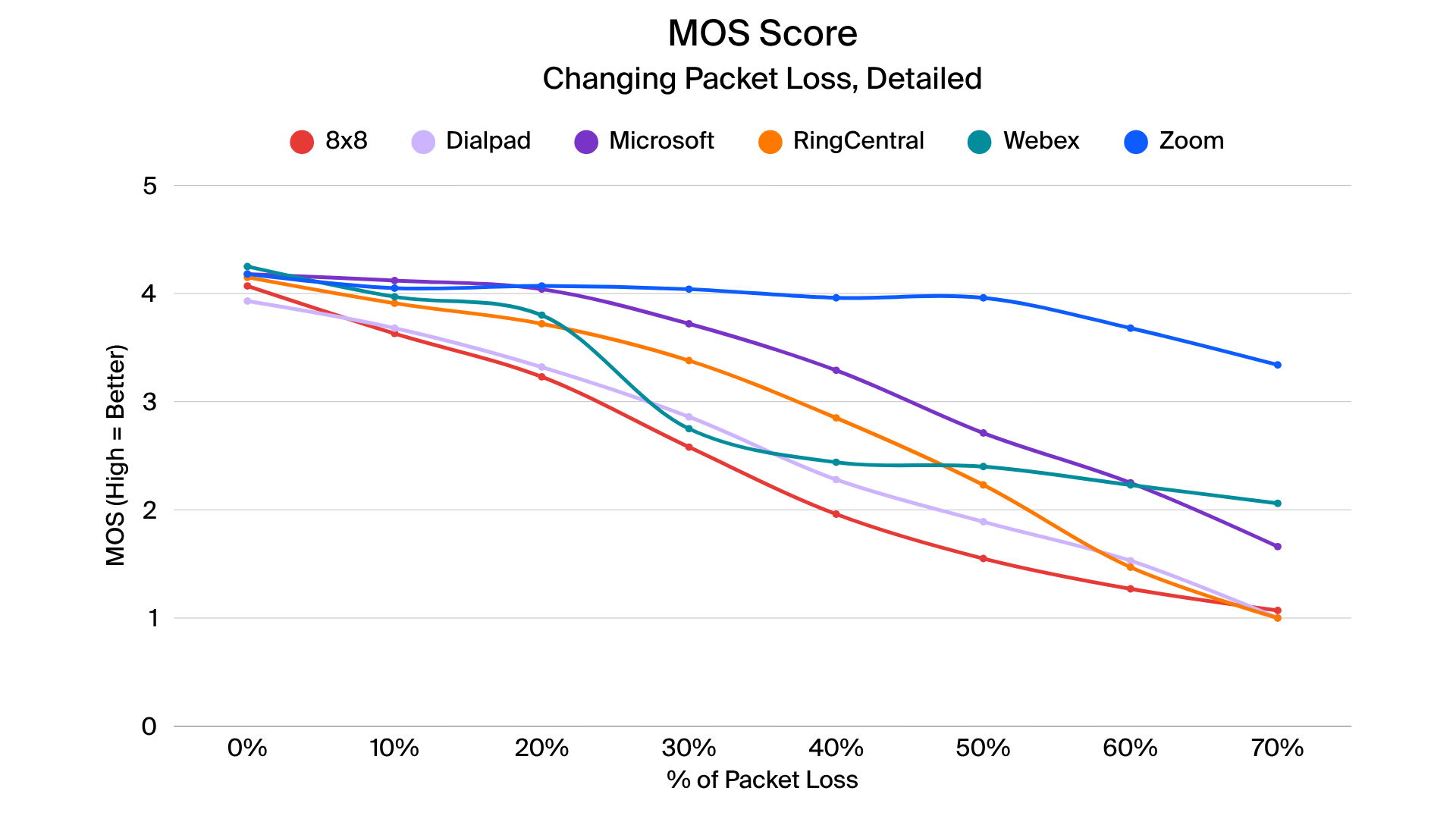

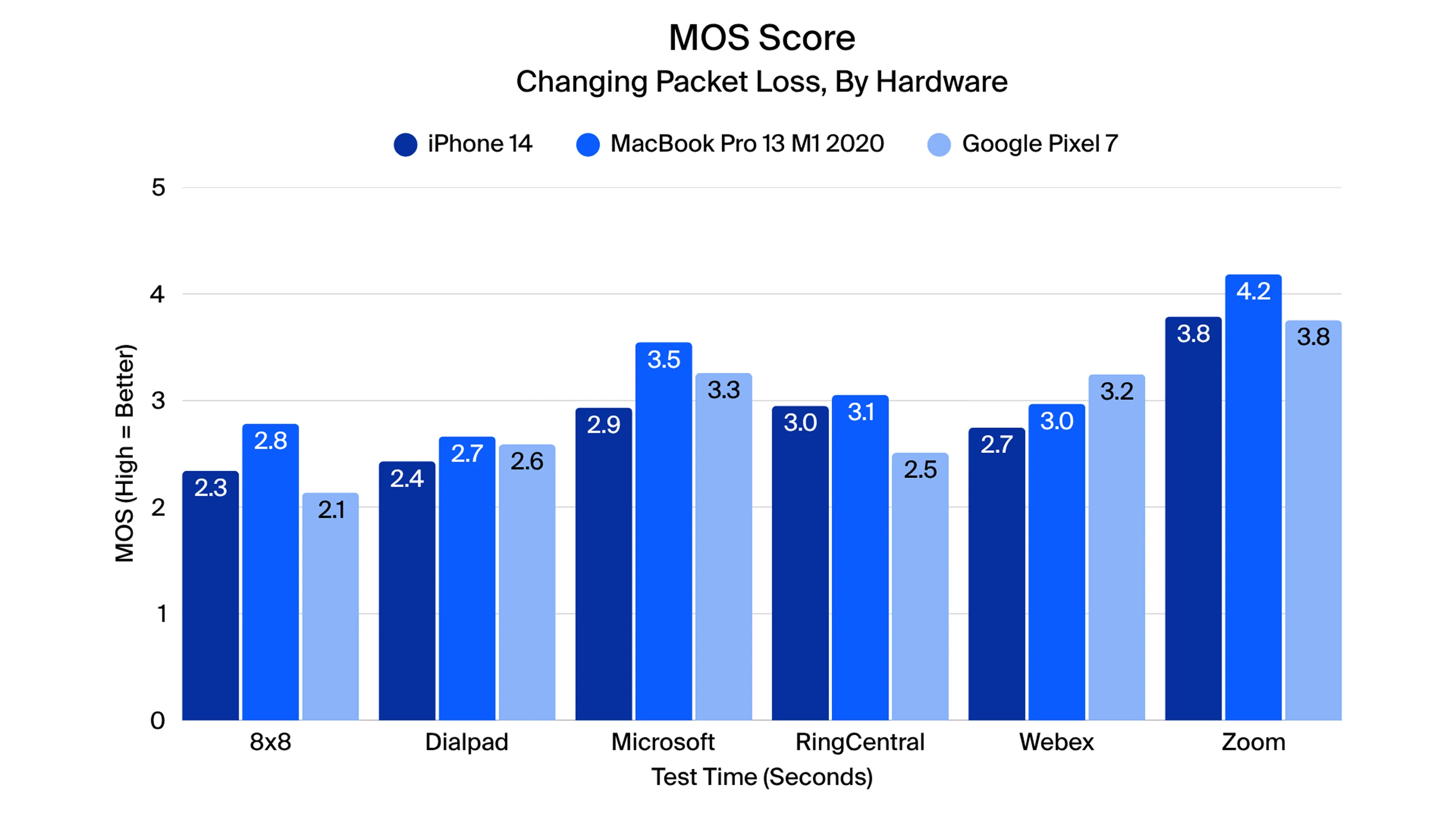

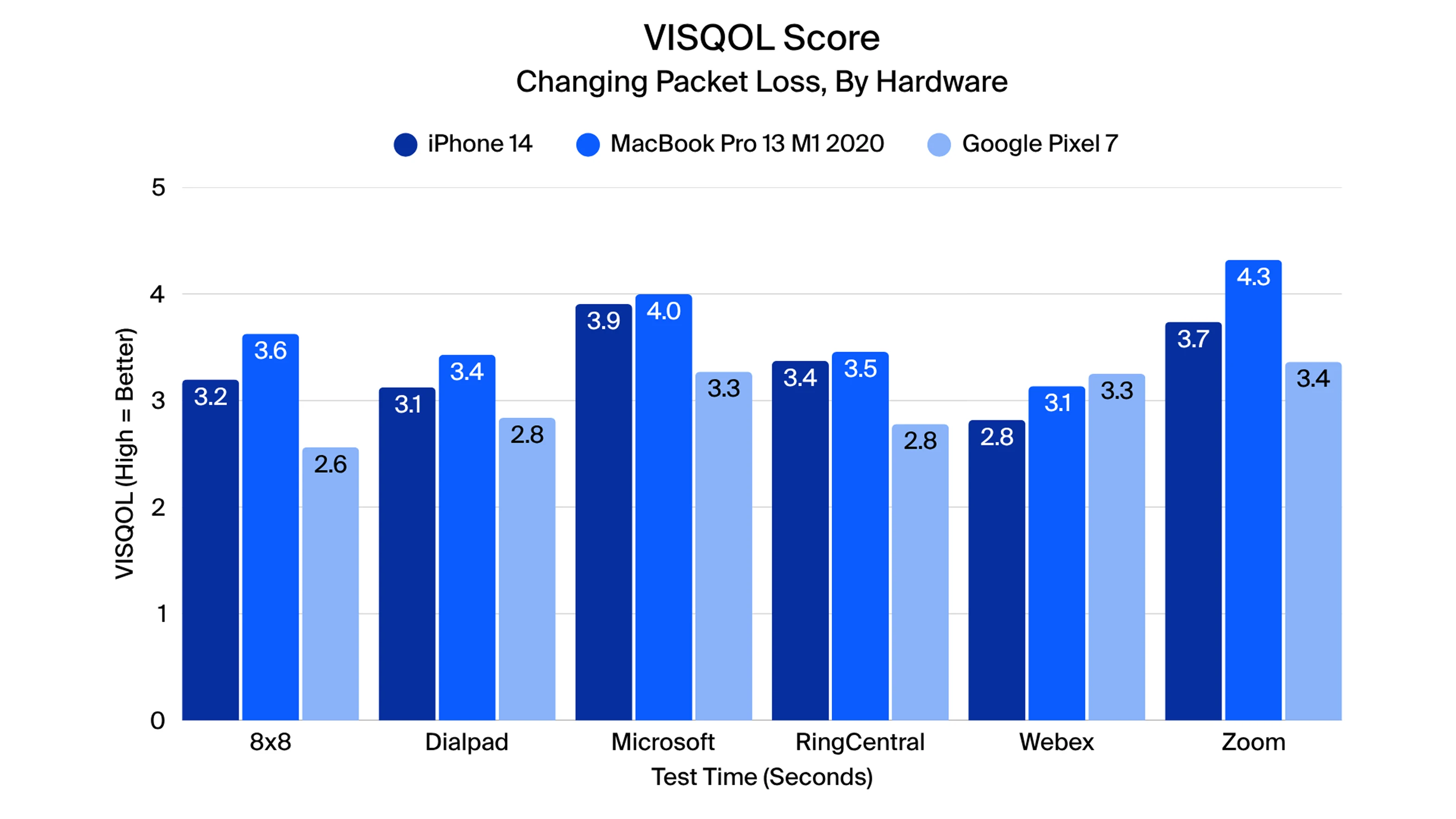

2. Resilient audio under packet loss

   - Zoom maintains high audio quality even as packet loss increases, with noticeable degradation only above 50 percent loss.

   - At 70 percent packet loss, Zoom retains a MOS of 3.3, outperforming all competitors. Zoom achieves this by dynamically adjusting bandwidth during packet loss to preserve call quality.

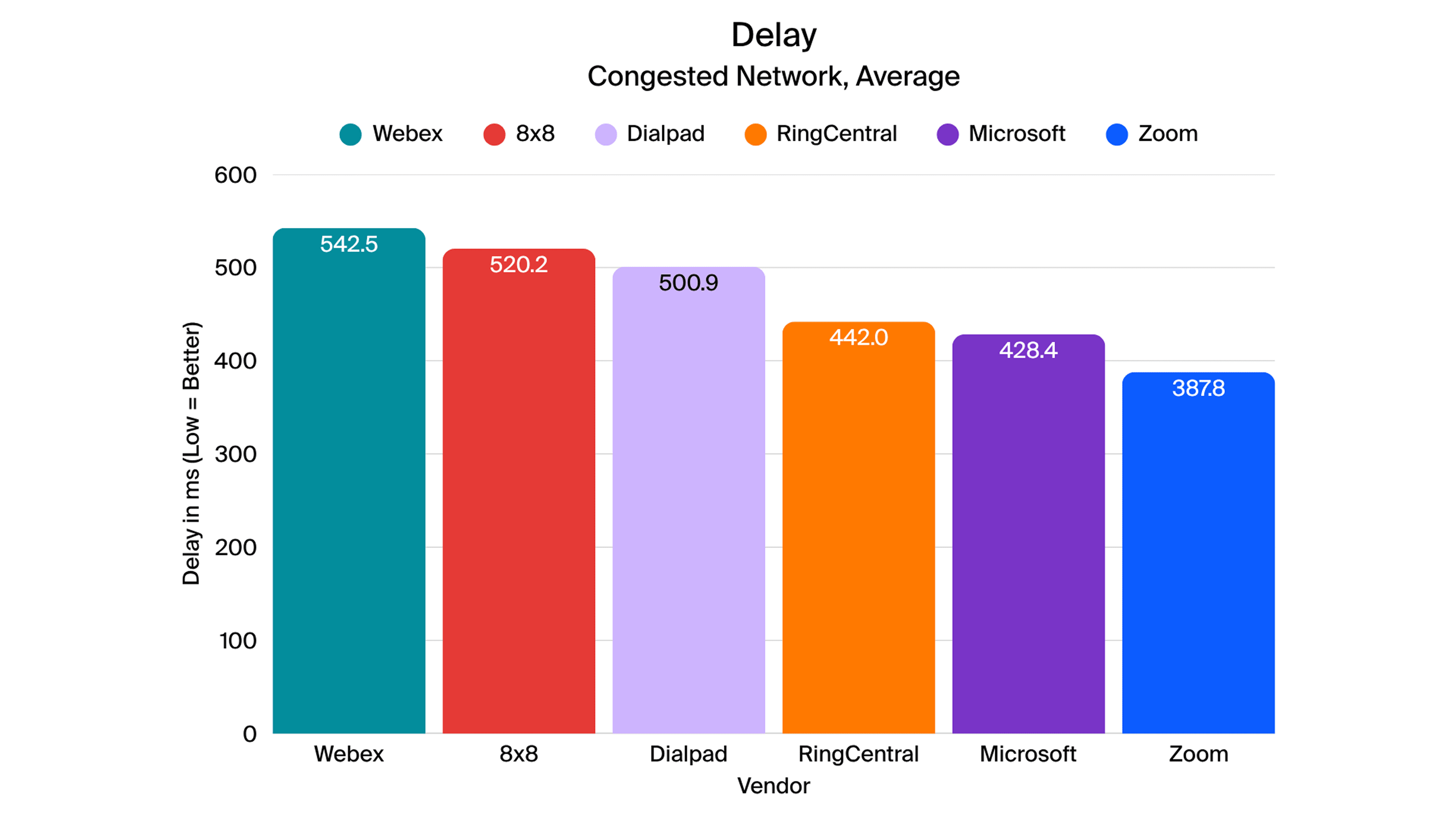

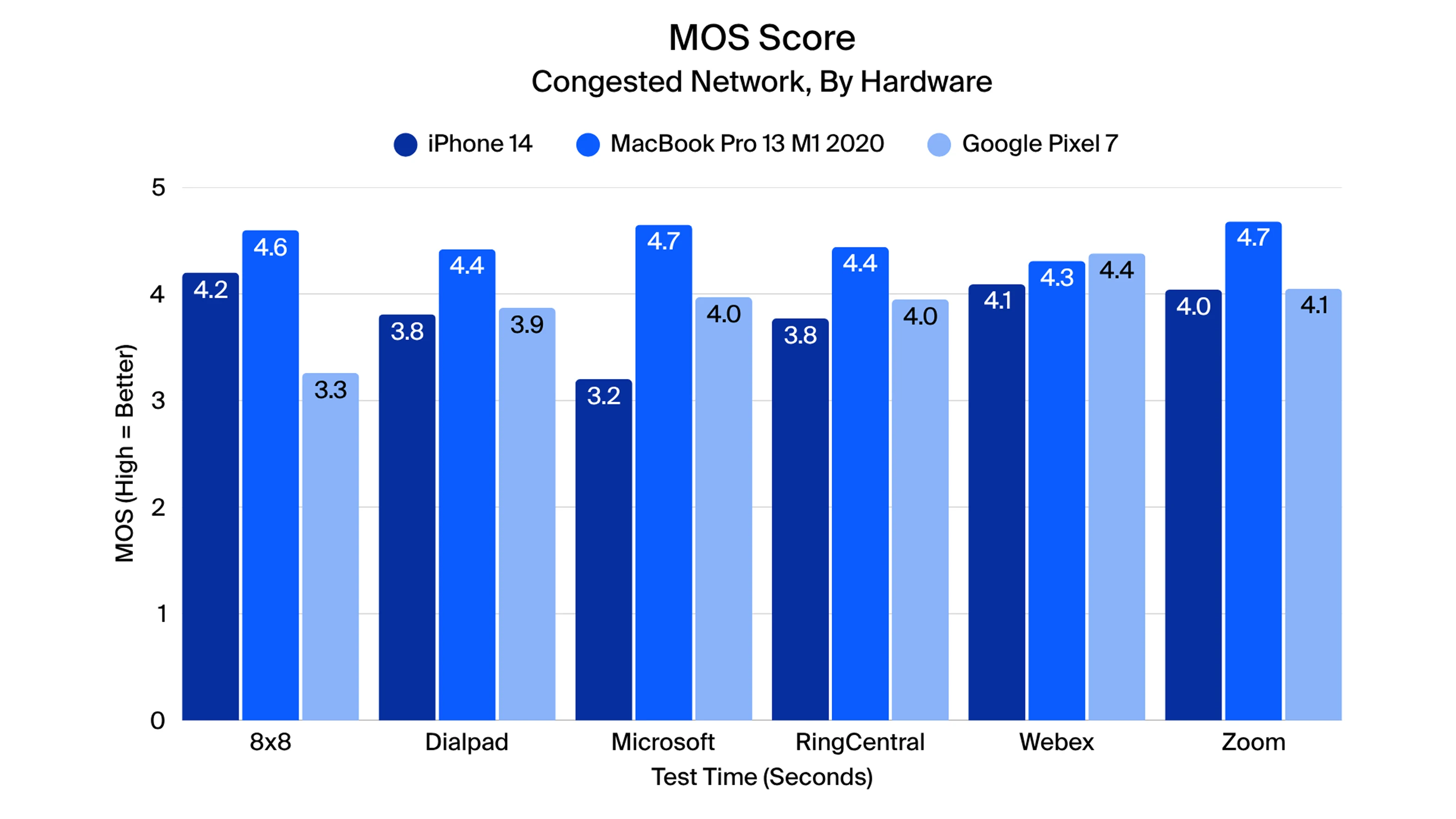

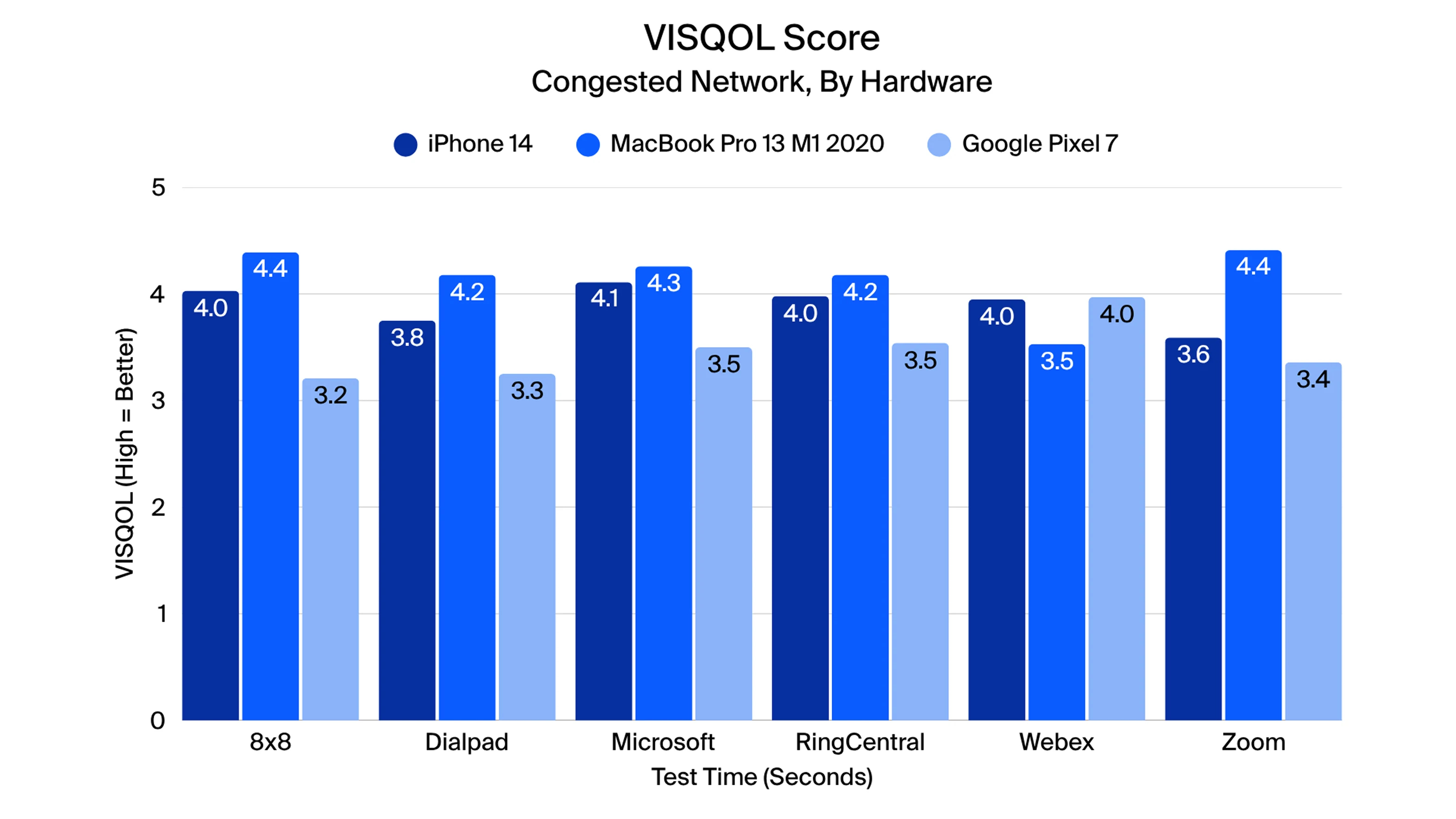

3. Stable quality under congested conditions

   - During network congestion, Zoom preserves consistent audio quality while maintaining low latency.

   - Zoom outperforms competitors across all platforms evaluated in the study, balancing clarity and speed even under heavy network load.

Audio performance

Baseline

macOS

All applications demonstrated excellent audio quality under stable network conditions. Zoom led with the lowest audio delay, making it the most responsive of the platforms tested on macOS. ViSQOL results generally aligned with POLQA, although Webex showed slightly lower ViSQOL scores. Network usage was largely consistent across apps, with minor variations: 8x8 and Dialpad used slightly less bandwidth on the sender side, while Webex used slightly more.

Android

Zoom and Webex maintained strong audio quality, though audio delay was higher for both than on macOS. Zoom remained among the top performers, and ViSQOL results closely followed POLQA trends. Network consumption patterns mirrored those seen on macOS.

iOS

Zoom again demonstrated excellent audio quality with the lowest delay, confirming consistent performance across the Apple devices used for testing. Other apps also performed well, and network usage was similar to that on the other platforms.

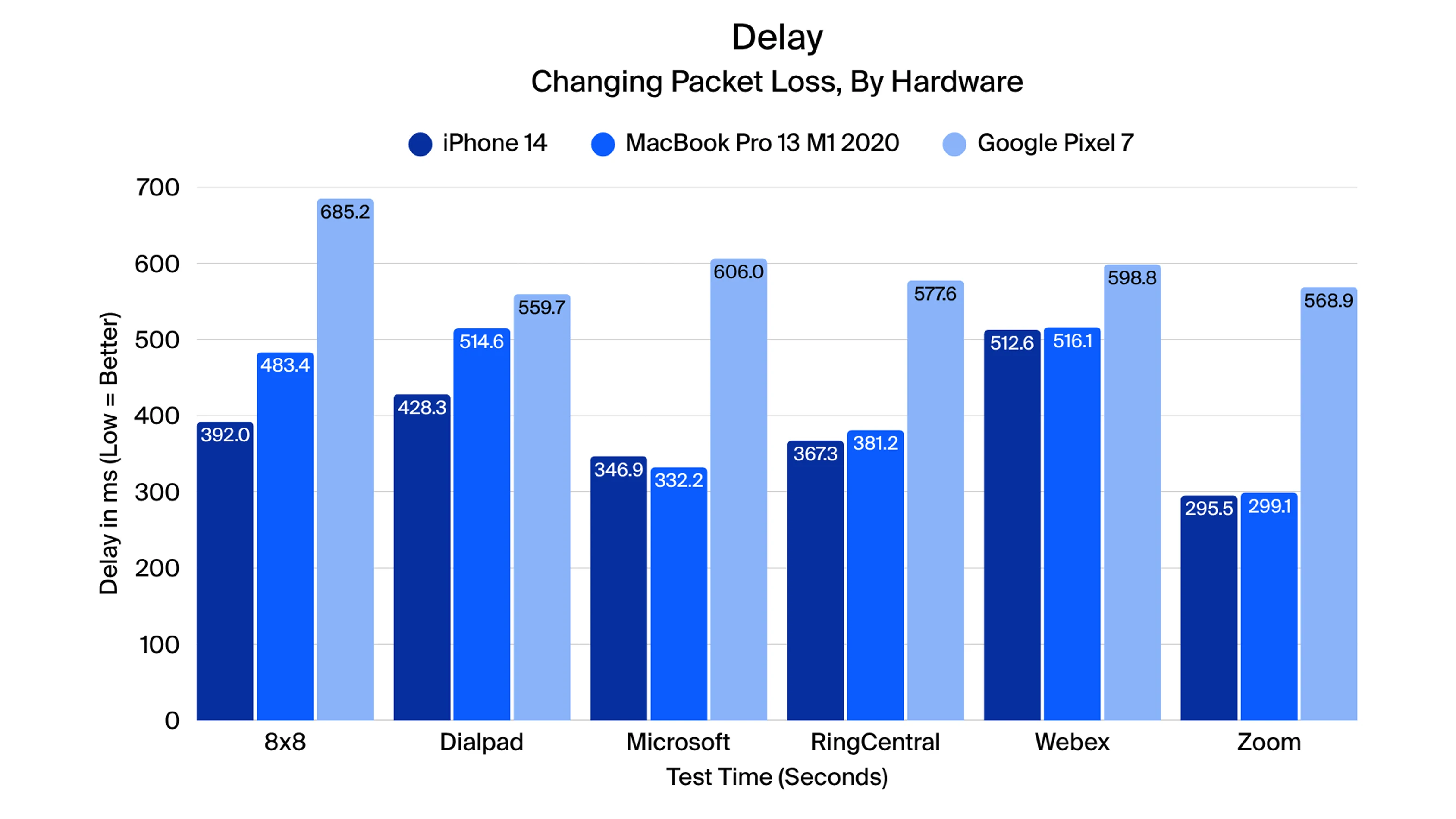

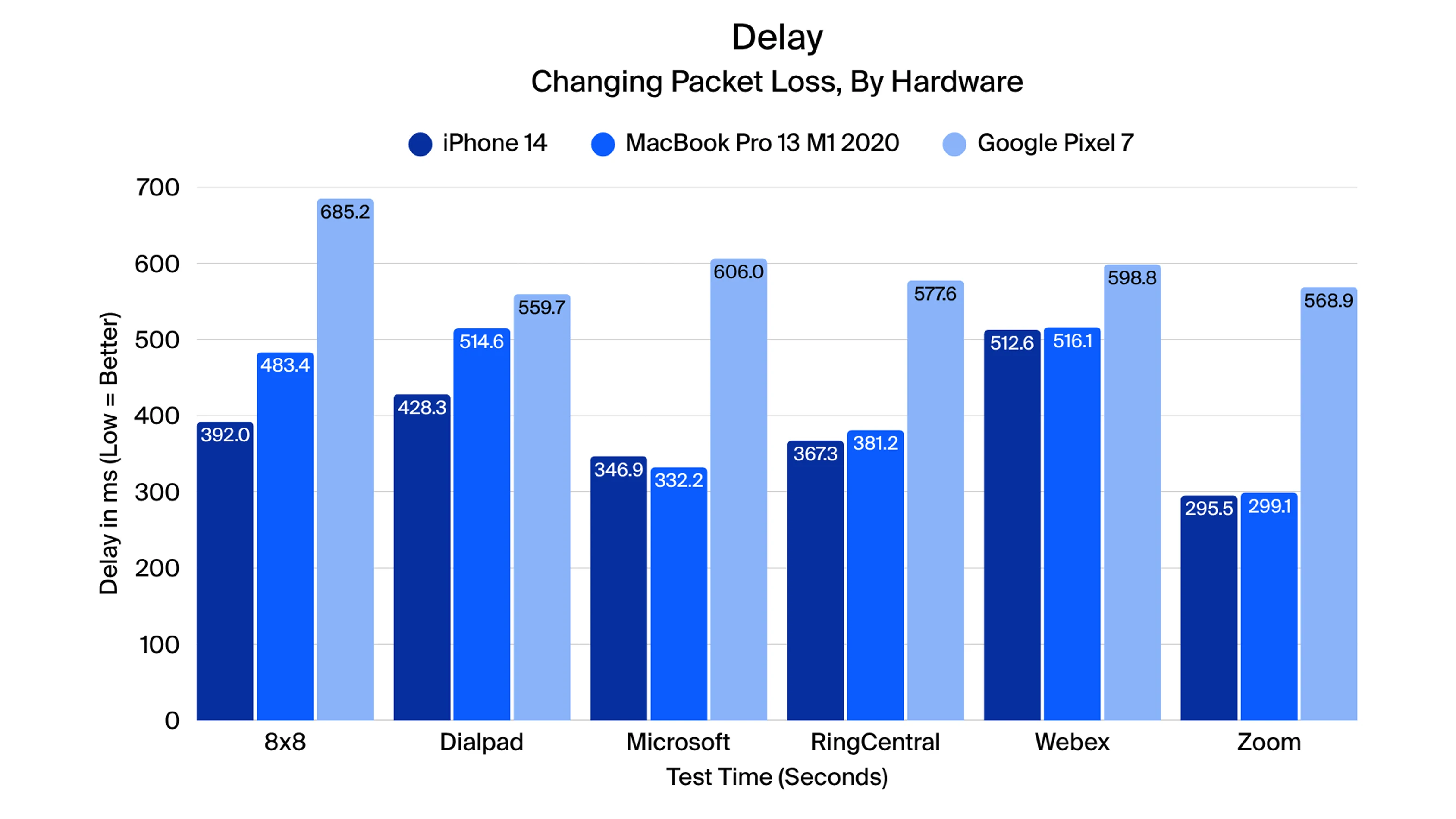

Changing packet loss

macOS

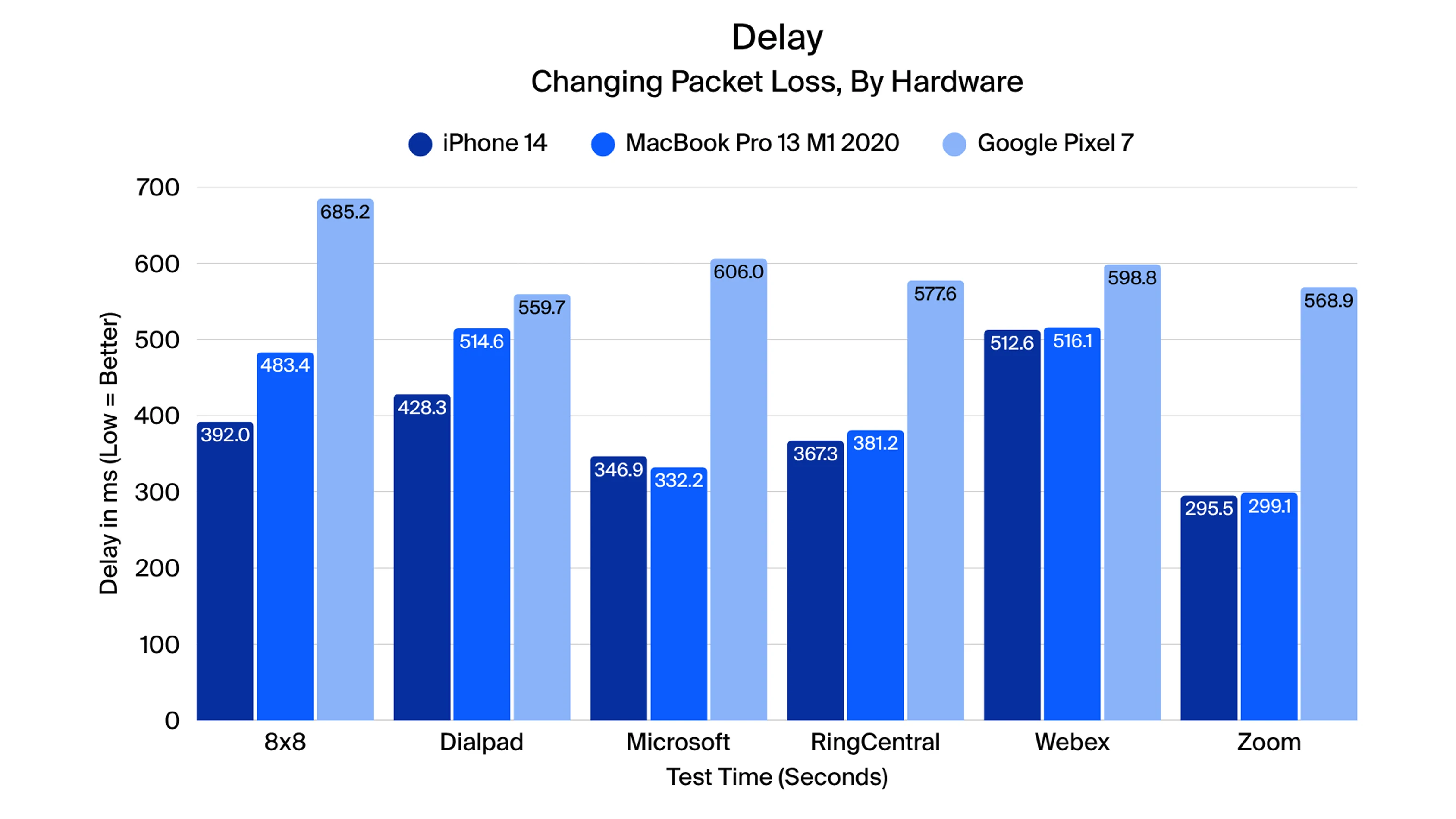

As packet loss increased, Zoom retained strong audio quality, with only a slight increase in audio delay as network conditions worsened. MS Teams introduced buffering at 10% packet loss, significantly increasing end-to-end delay. ViSQOL results correlated with POLQA, reinforcing the finding that Zoom maintained superior performance.

Android

Zoom preserved high audio quality throughout the scenario. While other apps occasionally had lower audio delay, this came at the cost of noticeably reduced audio quality. Zoom dynamically increased receiver-side network usage under high packet loss to maintain stable call performance.
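The report attributes Zoom's resilience to dynamically adjusting bandwidth but does not say which mechanism is used. As a simplified illustration only, assuming packet losses are independent and each audio frame is carried in r separate packets (plain duplication, not a description of Zoom's codec), a frame is lost only if every copy is lost, so the residual frame loss at packet loss rate p is:

```latex
P_{\text{frame lost}} = p^{r}, \qquad
p = 0.7:\quad r = 1 \Rightarrow 0.70,\quad r = 2 \Rightarrow 0.49,\quad r = 3 \Rightarrow 0.343
```

Each extra copy raises the bitrate proportionally, which is one way higher network usage under heavy loss can translate into more audio reaching the receiver. Real networks often lose packets in bursts, which weakens this benefit relative to the independent-loss case.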

iOS

Zoom maintained the best overall performance. Its audio delay increased modestly with rising packet loss, while other apps, such as MS Teams, experienced gaps in the audio that their ViSQOL scores did not reflect.

Congested network

macOS

Zoom excelled in maintaining stable audio quality and low delay during periods of network congestion. Webex and 8x8 were the most affected, showing notable dips in quality, while the other platforms maintained consistent performance.

Android

Zoom again preserved call quality under congestion. Webex and RingCentral showed reduced audio quality, though audio delay spikes were similar across all apps.

iOS

Zoom maintained quality, while Webex and RingCentral were the most impacted. Overall, Zoom showed the lowest end-to-end audio delay of the tested applications under congested conditions.

Across all tested platforms and scenarios, Zoom consistently demonstrated strong audio performance and reliable call quality. Under baseline conditions, Zoom delivered some of the lowest end-to-end audio delay while maintaining excellent POLQA and ViSQOL scores. Under challenging network conditions, including increasing packet loss and congested networks, Zoom outperformed the competitors included in the study by preserving audio quality and minimizing delay, demonstrating efficient handling of network impairments.

Overall, Zoom’s performance highlights a combination of stable audio quality, low latency, and resilient handling of network and environmental challenges.

Key strengths:

- Consistently low audio delay across platforms, especially under baseline and congested network conditions

- Superior audio quality retention during packet loss scenarios

Zoom’s performance demonstrates its ability to deliver a seamless, high-quality audio experience even in adverse network conditions, making it a strong choice for professional and enterprise communications.