Audio & Video SDK for Web Report 2025

When developers embed real-time communication into their applications, SDK quality determines whether users experience seamless, reliable interactions or face frustrating disruptions. Unlike standalone meeting apps, SDKs must deliver consistent audio, video, and data performance across varied devices, networks, and integration scenarios—all while staying lightweight and developer-friendly. This report evaluates the SDK quality of leading vendors to understand how each performs under real-world conditions and where meaningful differences emerge:

- Agora Video Calling SDK

- Twilio Video API

- Vonage Video API

- Zoom SDK for Web (versions 1.X and 2.X)

About TestDevLab

TestDevLab (TDL) offers software testing and quality assurance services for mobile, web, and desktop applications, along with custom testing tool development. The company develops advanced custom solutions for security, battery, and data usage testing of mobile apps. It also offers video and audio quality assessment for VoIP and communications apps, products for automated backend API testing, and web load and performance testing.

The TestDevLab team comprises 500+ highly experienced engineers who focus on software testing and development. The majority of its test engineers hold International Software Testing Qualifications Board (ISTQB) certifications, reflecting a high level of expertise in software testing according to industry standards.

To ensure a fair and repeatable comparison across vendors, tests were conducted under controlled conditions that reflect realistic application scenarios. Each SDK was evaluated with the same set of devices, network limitations, and playback materials to isolate differences in SDK performance rather than environmental factors.

Vendors and versions tested

All products tested were at their latest releases as of July 2025.

- Zoom 1.X (1.12.17)

- Zoom 2.X (2.2.0)

- Twilio (2.30.0)

- Vonage (2.21.2)

- Agora (4.23.2)

Platforms and devices

Tests were performed across a consistent hardware set to reflect both desktop and mobile scenarios:

- Sender: Windows 11 Pro / MacBook Pro M1

- Receiver: Windows 11 Pro / MacBook Pro M1

- Extra user: macOS M1

- Additional device: Lenovo ThinkPad T14 Gen (Intel Core i5-1335U, 16GB RAM)

Network conditions tested

Each SDK was stressed across varying network constraints to capture quality under both ideal and degraded conditions:

- Baseline: network with no limitations

- Bandwidth: Unlimited → 1 Mbps → 500 kbps → Unlimited

- Packet loss: 5% → 10% → 20% → 40%
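The three network profiles listed above can be written down as data so that every SDK is driven through identical stages. The following is a minimal sketch, not the tooling TestDevLab used: the `NetworkStep` structure and the `describe` helper are hypothetical, the report does not state how long each stage lasted, and it is an assumption that bandwidth stayed uncapped during the packet loss stages.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NetworkStep:
    """One stage of a network limitation scenario (hypothetical structure)."""
    bandwidth_kbps: Optional[int]  # None means no bandwidth cap
    packet_loss_pct: float         # 0 means no injected loss


# Stage order copied from the lists above (1 Mbps = 1000 kbps).
BASELINE = [NetworkStep(None, 0)]
BANDWIDTH = [NetworkStep(bw, 0) for bw in (None, 1000, 500, None)]
# Assumption: bandwidth stays uncapped while packet loss is injected.
PACKET_LOSS = [NetworkStep(None, loss) for loss in (5, 10, 20, 40)]


def describe(step: NetworkStep) -> str:
    """Render a stage as a short human-readable label."""
    if step.bandwidth_kbps is None:
        cap = "unlimited"
    else:
        cap = f"{step.bandwidth_kbps} kbps"
    return f"{cap}, {step.packet_loss_pct:g}% loss"


for step in BANDWIDTH:
    print(describe(step))
```

Keeping the stages as immutable values makes it easy to check that the bandwidth scenario starts and ends in the same unlimited state, which is what allows recovery behavior to be observed.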

Test procedure

Each scenario followed the same step-by-step process to ensure consistency across vendors:

- Sender creates the room

- Receiver, third, and fourth users join the room (number of users based on meeting type)

- Sender restarts video playback

- Capture and network limitation scripts are started

- Test ends when the sent video reaches the blue screen

- Capture scripts are stopped

- All users disconnect from the call

This approach allowed testers to gather synchronized video, audio, and performance data from each session.

Data capture and metrics

To build a complete picture of SDK performance, multiple data streams were captured during each test:

- Network traces collected on the router to measure real conditions

- Receiver video recorded directly from the receiver device using a capture card

- Performance data logged from the test devices themselves

- Delay video recorded on a separate device to measure latency

- Audio captured independently for reference and verification

Test settings

Tests were standardized across platforms to minimize external variables:

- Receiver view: Dominant speaker view, with an extra user visible

- Receiver resolution: 1920×1080 on macOS, 2560×1440 on Windows

- Audio setup: Audio cards with analog audio cables

- Video capture: Elgato Camlink HDMI into a separate MacBook

- Delay capture: QuickTime Player with Logi Tune configuration

- System setup: Auto-updates disabled on all devices to avoid interruptions

Evaluation approach

The evaluation focused on two primary quality attributes:

- Visual quality (sharpness, color fidelity, motion smoothness, absence of artifacts)

- Video fluidity (minimizing freezes and stuttering)

To provide a complete picture of SDK quality, TestDevLab used two complementary evaluation methods:

- Automated evaluation: capturing objective metrics such as audio quality (POLQA), video quality (VMAF, VQTDL), bitrate, and resource usage

- Manual evaluation: human raters reviewed receiver-side recordings and scored perceived quality using the Absolute Category Rating (ACR) 1–5 scale

All test sessions were prepared as receiver-side recordings with anonymized filenames to prevent bias. Videos were cropped to exclude UI elements and scaled to 720p for consistency:

- Desktop tests: 16:9 aspect ratio, scaled to 720p (downscale for 2U, upscale for 4U)

- Mobile tests: 9:16 aspect ratio, scaled to 720p portrait (downscale for 2U, upscale for 4U). Some SDKs were excluded at the 500 kbps limit when performance collapsed into complete freezes.
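As a rough illustration of the scaling step, the sketch below derives the output frame size from an aspect ratio and reports whether a clip would be downscaled or upscaled. It assumes that "720p" fixes the shorter side at 720 pixels (1280×720 for 16:9, 720×1280 for 9:16 portrait); the function names and the sample source sizes are hypothetical and are not taken from the report.

```python
from typing import Tuple


def target_size(aspect_w: int, aspect_h: int, short_side: int = 720) -> Tuple[int, int]:
    """Return (width, height) with the shorter side fixed at short_side."""
    if aspect_w >= aspect_h:
        # Landscape: height is the shorter side.
        return round(short_side * aspect_w / aspect_h), short_side
    # Portrait: width is the shorter side.
    return short_side, round(short_side * aspect_h / aspect_w)


def scale_direction(source_short_side: int, short_side: int = 720) -> str:
    """Say whether a cropped recording must shrink or grow to reach the target."""
    if source_short_side > short_side:
        return "downscale"
    if source_short_side < short_side:
        return "upscale"
    return "none"


print(target_size(16, 9))     # desktop layout
print(target_size(9, 16))     # mobile portrait layout
print(scale_direction(1080))  # hypothetical crop larger than the target
print(scale_direction(540))   # hypothetical crop smaller than the target
```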

Participants and environment

To approximate a natural user experience, tests were viewed on calibrated devices (iPhone 14 for mobile, MacBook Pro for desktop) in neutral lighting. Ten participants with varying visual acuity, technical expertise, and viewing habits provided independent evaluations.

Scoring methodology

Quality was measured using Absolute Category Rating (ACR), where each video was rated independently on a 5-point scale:

- 5 – Excellent: Flawless, impairments imperceptible

- 4 – Good: Minor impairments, visible only on close inspection

- 3 – Fair: Noticeable impairments, somewhat annoying

- 2 – Poor: Significant impairments, disruptive to experience

- 1 – Bad: Severe impairments, unacceptable experience

This combination of controlled testing, diverse participant feedback, and standardized scoring provided a robust foundation for comparing SDK quality across vendors.
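ACR ratings from several raters are commonly summarized as a mean opinion score (MOS). The report does not spell out how the ten participants' ratings were aggregated, so the sketch below assumes a plain arithmetic mean; the function name and the sample ratings are hypothetical.

```python
ACR_LABELS = {5: "Excellent", 4: "Good", 3: "Fair", 2: "Poor", 1: "Bad"}


def mean_opinion_score(ratings):
    """Average a list of ACR ratings (integers 1 to 5) into a single score."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for rating in ratings:
        if rating not in ACR_LABELS:
            raise ValueError(f"not a valid ACR rating: {rating!r}")
    return sum(ratings) / len(ratings)


# Hypothetical ratings from ten participants for one anonymized clip.
ratings = [4, 5, 4, 3, 4, 4, 5, 3, 4, 4]
print(mean_opinion_score(ratings))
```

Validating each rating against the scale before averaging catches data-entry errors, such as a 0 or a 6, that would otherwise skew the score silently.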

Testing across varied conditions highlighted several scenarios where Zoom’s SDKs clearly outperformed competitors. These results underscore Zoom’s ability to deliver resilient, high-quality experiences under challenging network environments.

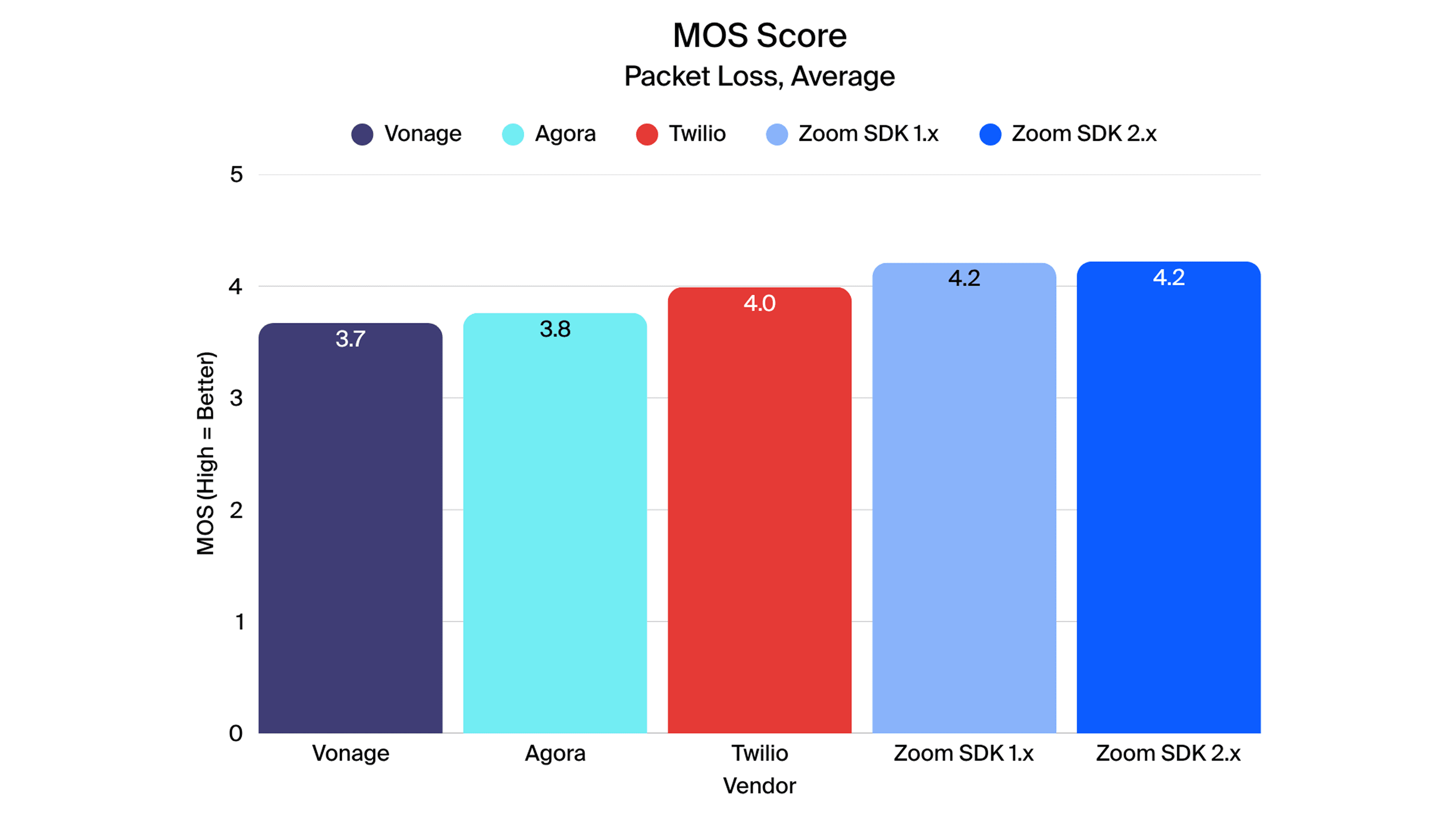

- Audio quality under packet loss

Zoom consistently delivered the highest POLQA scores, maintaining intelligibility and clarity when other SDKs degraded.

- Up to 56% better audio quality depending on network conditions

- Maintained POLQA scores above the ~3.5 threshold where speech degradation becomes noticeable

- Up to 4.4x lower audio delay than competitors in imperfect network conditions (e.g., mobile)
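Headline figures of the form "N% better" and "N times lower" are typically relative comparisons between two measurements. The report does not state the exact formulas behind its figures, so the sketch below shows one common interpretation; the function names are hypothetical and the input values are made up purely to illustrate the arithmetic.

```python
def percent_better(score: float, reference: float) -> float:
    """Relative improvement of score over reference, in percent (higher is better)."""
    return (score - reference) / reference * 100


def times_lower(value: float, reference: float) -> float:
    """How many times smaller value is than reference (lower is better)."""
    return reference / value


# Made-up illustrative values, not measurements from the report.
print(round(percent_better(4.0, 3.2), 1))  # a quality score of 4.0 against 3.2
print(round(times_lower(150.0, 300.0), 1))  # a delay of 150 ms against 300 ms
```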

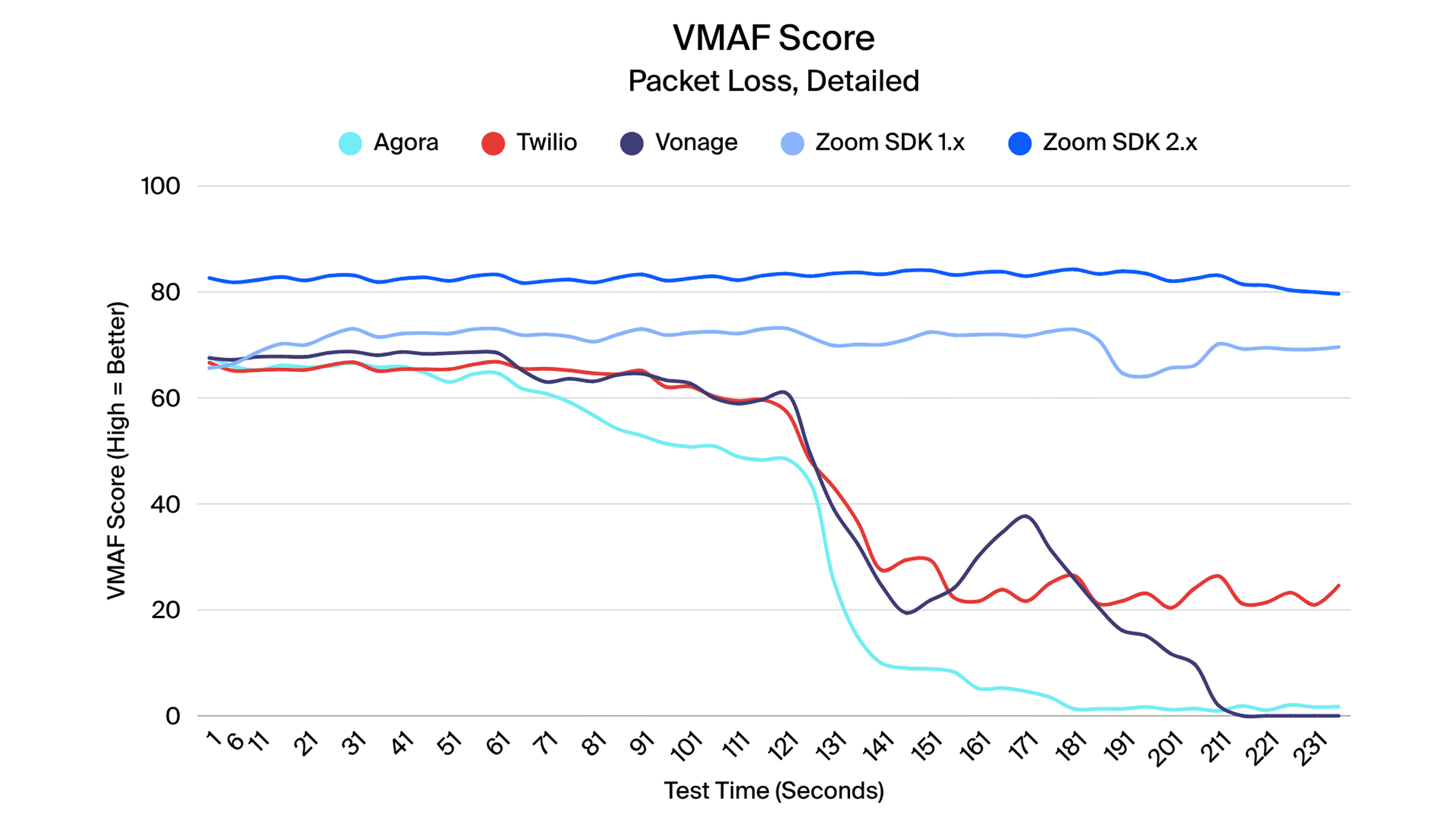

- Video quality consistency

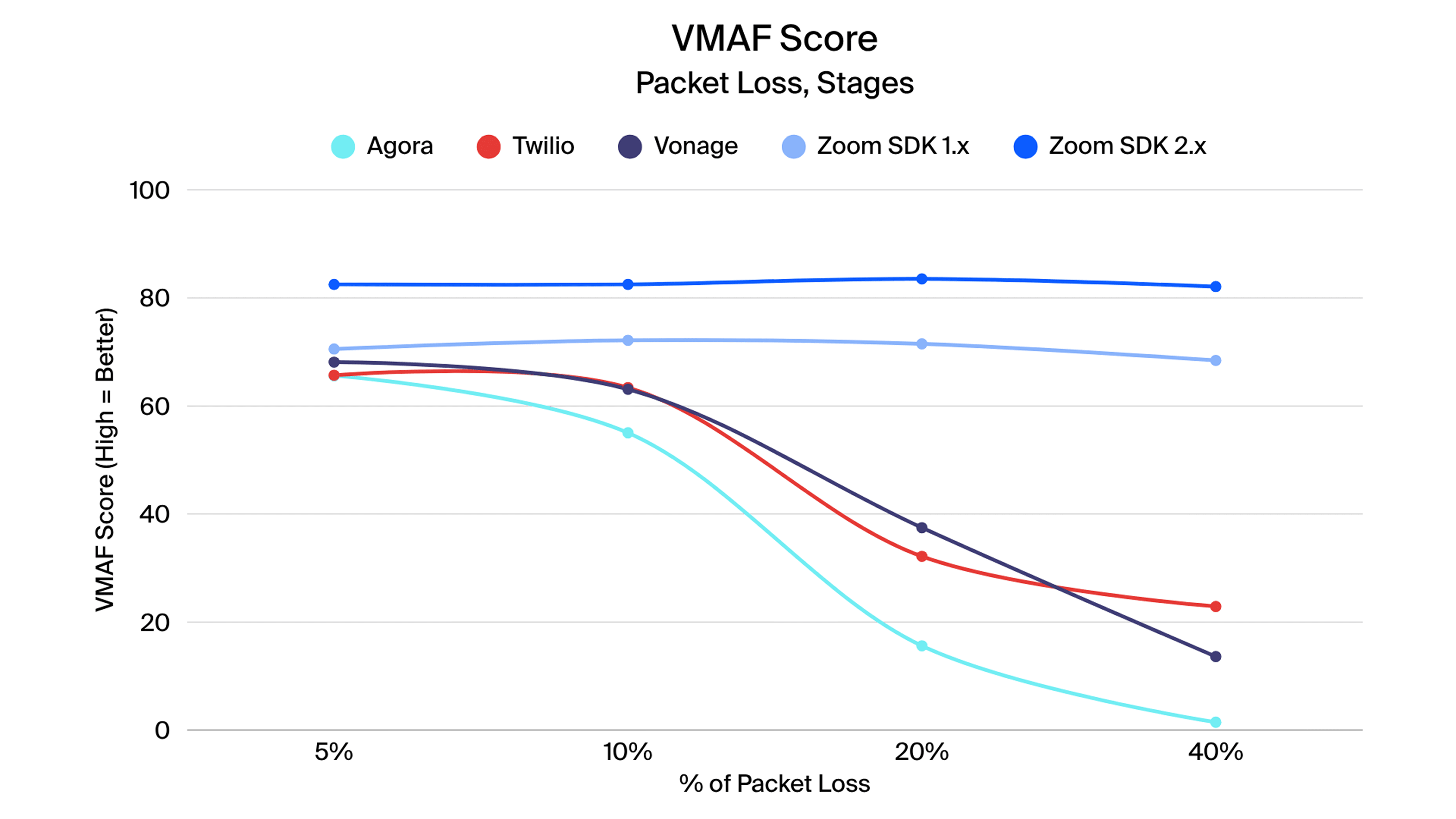

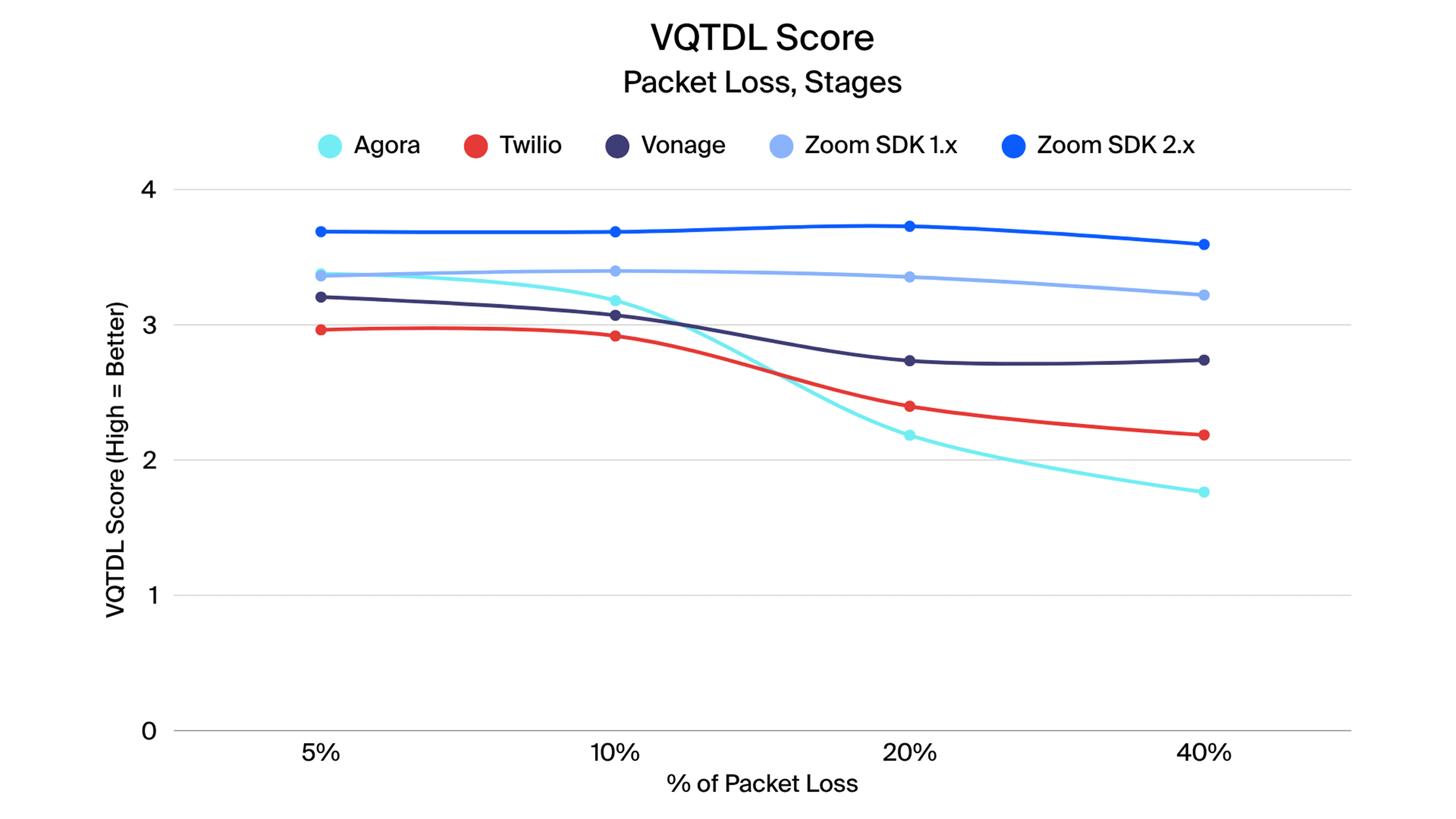

Zoom held video quality steady across conditions, avoiding the sharp VMAF drops seen in competing SDKs.

- Stable VMAF and VQTDL values even under bandwidth limits and high packet loss

- Up to 42% better video quality in 4-user scenarios compared to competitors

- Up to 8x more frames per second than competitive platforms in 4-user scenarios

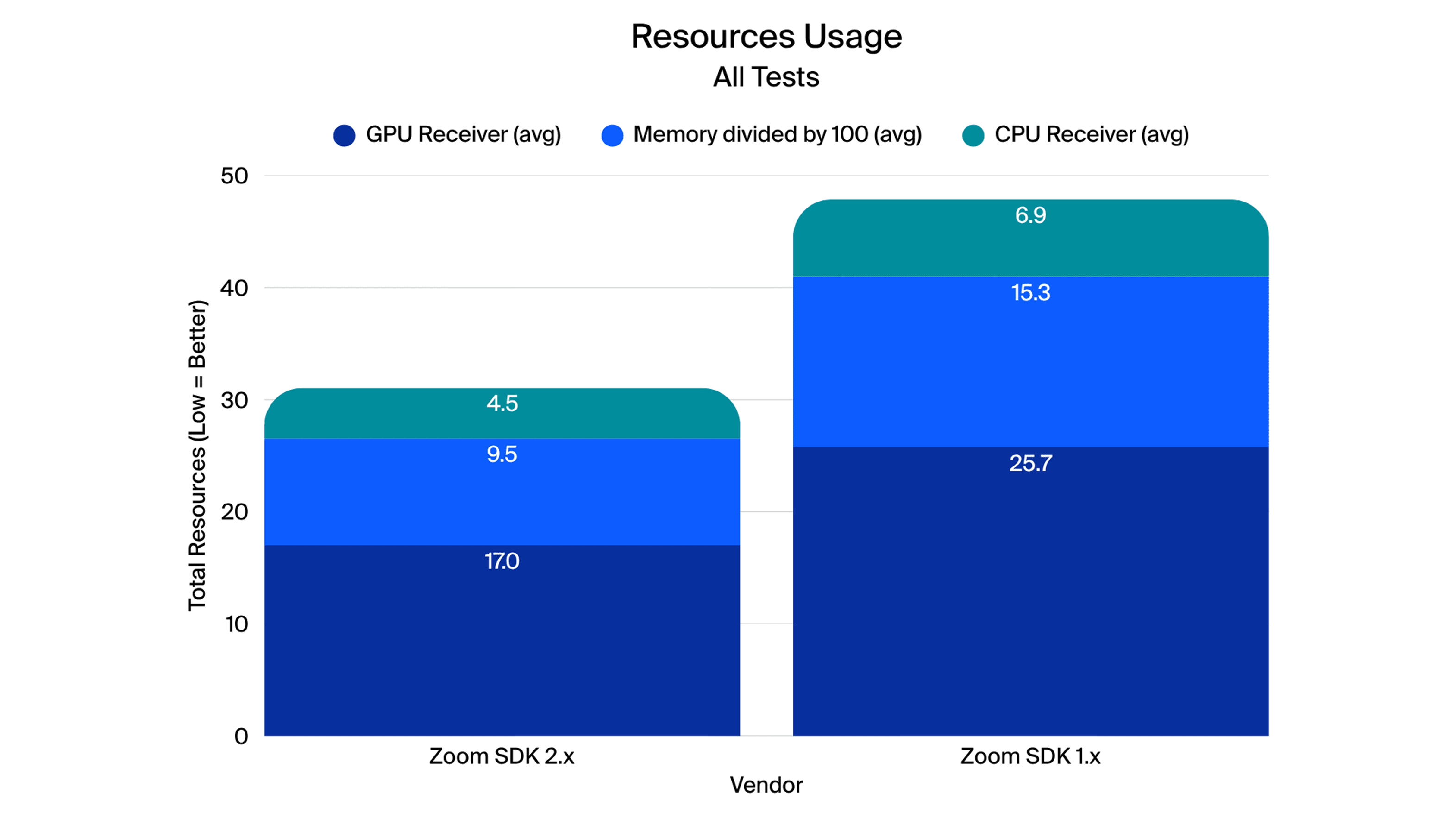

- Resource utilization

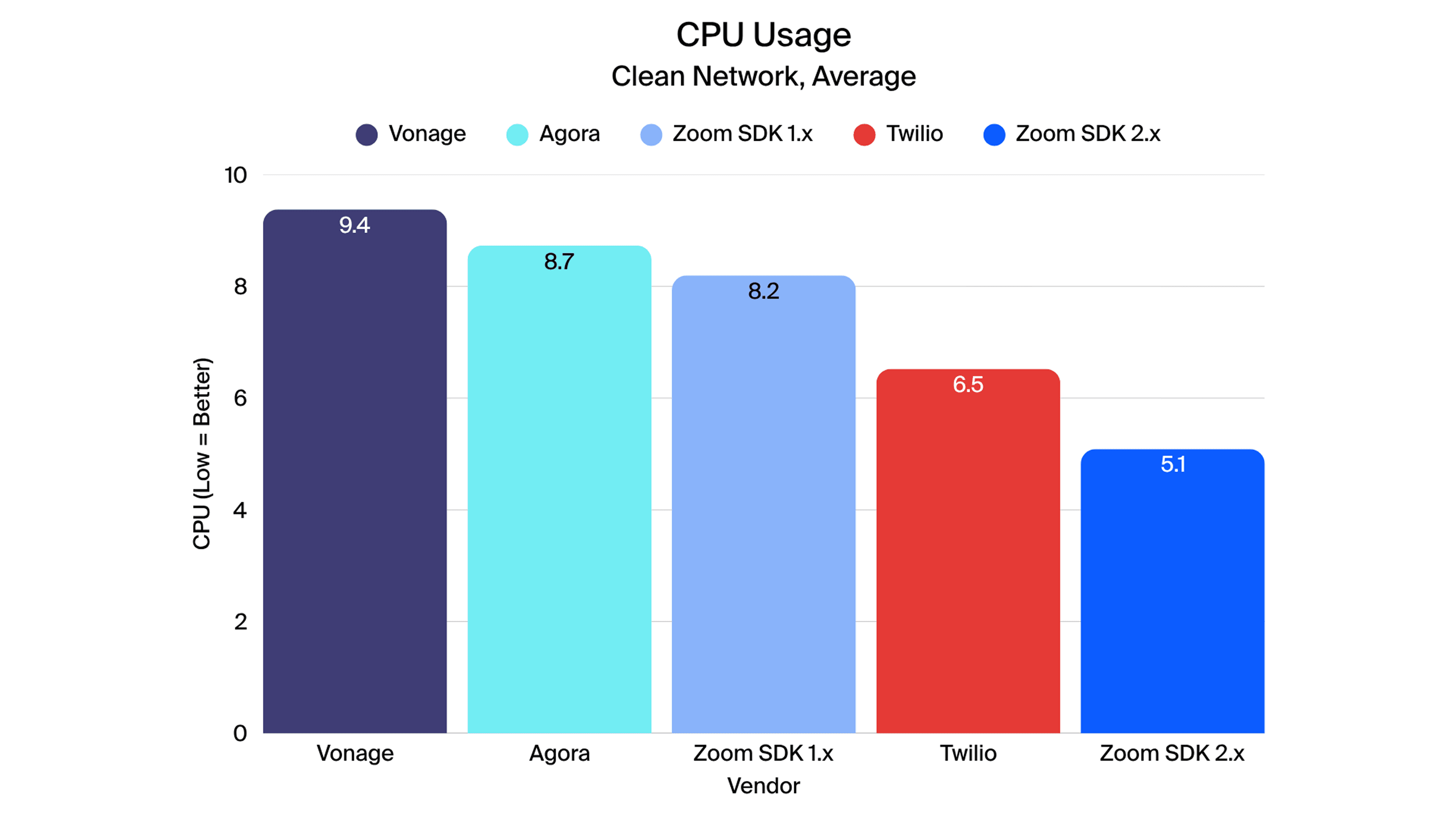

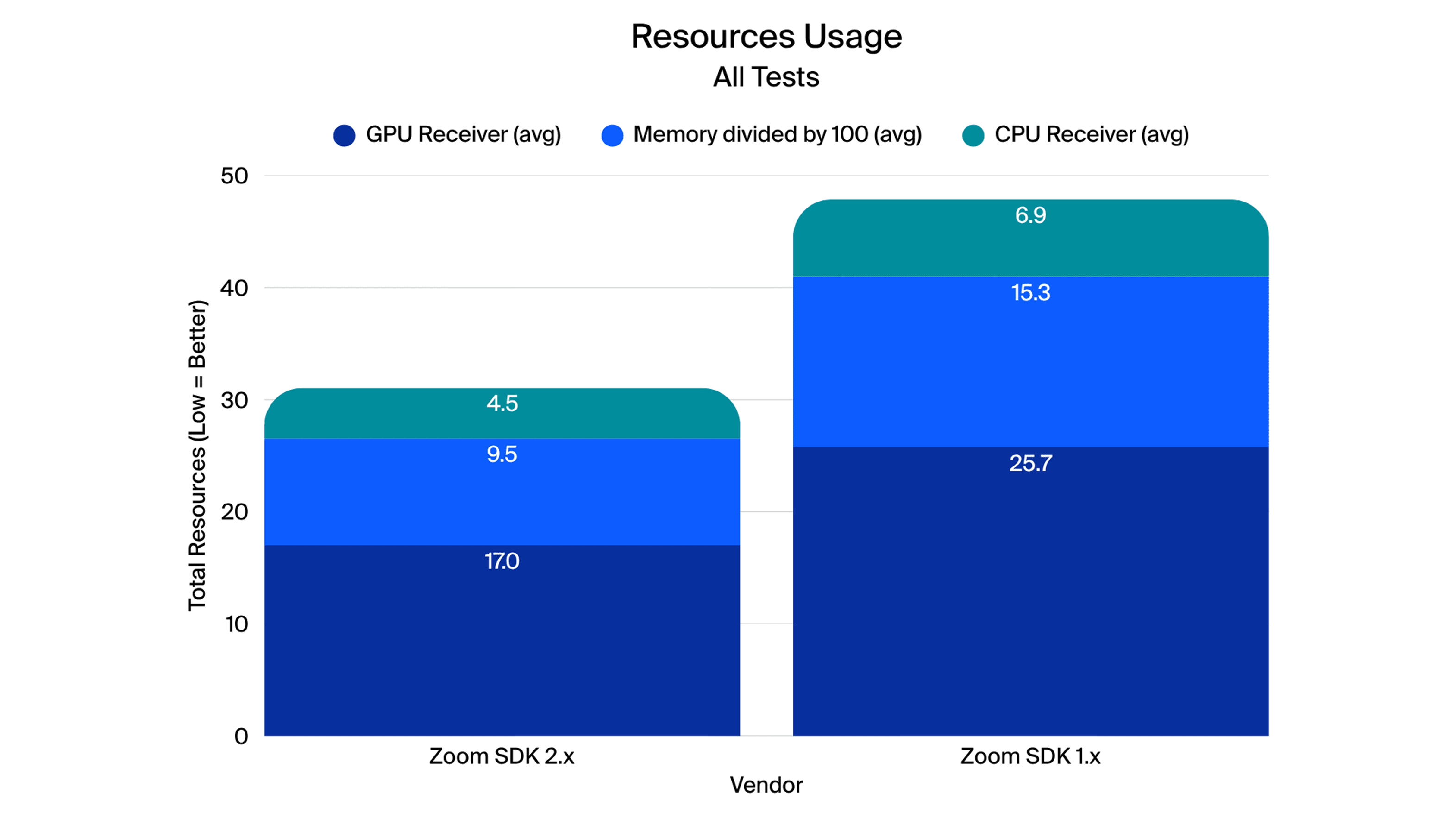

Zoom 2.X uses significantly fewer system resources than Zoom 1.X.

- Overall CPU and memory usage reduced by roughly 40% compared to 1.X

- Lower resource consumption helps prevent device slowdowns or instability in real-world applications

- Provides developers a more efficient and scalable SDK for embedding real-time communications

- Performance under packet loss

Packet loss testing (5% → 40%) revealed strong resilience from Zoom SDKs, particularly in audio quality and video consistency.

- Audio quality (POLQA)

- Zoom maintained the highest POLQA scores across both macOS and Windows platforms, outperforming all tested competitors at 10–40% packet loss.

- Other vendors (Agora, Twilio, Vonage) experienced steep declines, often falling below the ~3.5 threshold where speech clarity degrades.

- Video quality (VMAF, VQTDL)

- Zoom preserved steady quality scores, with minimal drop even as packet loss increased.

- Competing SDKs showed significant degradation, producing noticeable artifacts and blurriness.

- Video fluidity (frame rate and freezes)

- Zoom 2.X showed fewer major freezes than Zoom 1.X, with Agora performing best at extreme loss.

- At 20% packet loss, Zoom 2.X had some framerate dips but remained within fluid experience thresholds (>14 fps).

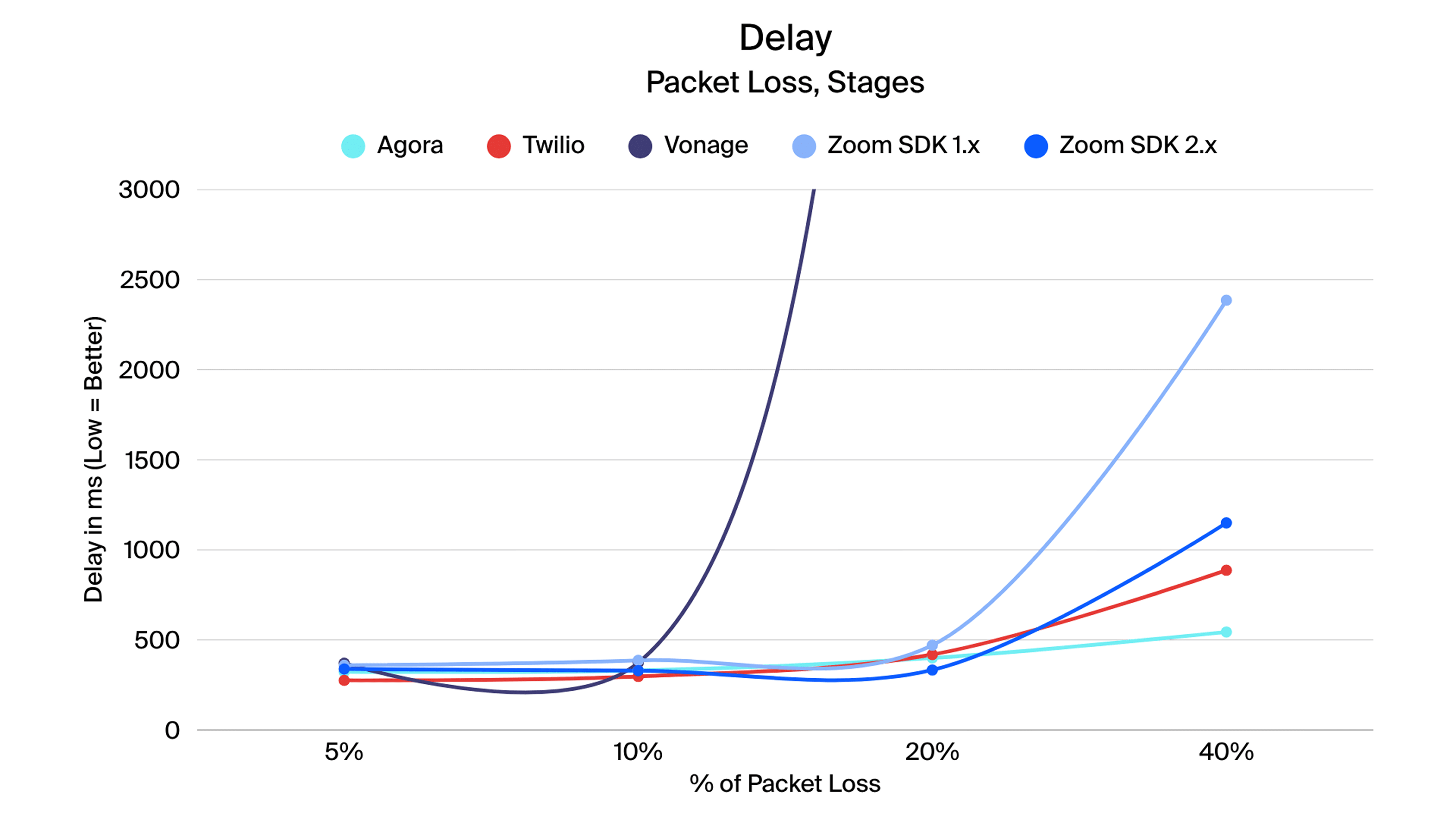

- Video delay

- Zoom 2.X and Agora minimized video delay, helping maintain A/V sync.

- Zoom 1.X was more affected but still competitive compared to Vonage.
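The two thresholds cited in this section, roughly 3.5 for POLQA and 14 fps for fluid video, can be applied to captured samples with a simple check. This is a minimal sketch under the assumption that frame rate is sampled at regular intervals; the function names and sample values are hypothetical and do not come from the report's data.

```python
FLUID_FPS_THRESHOLD = 14.0  # the report treats > 14 fps as a fluid experience
POLQA_THRESHOLD = 3.5       # approximate point where speech degradation is noticeable


def fluid_fraction(fps_samples, threshold=FLUID_FPS_THRESHOLD):
    """Fraction of frame-rate samples strictly above the fluidity threshold."""
    if not fps_samples:
        raise ValueError("at least one sample is required")
    fluid = sum(1 for fps in fps_samples if fps > threshold)
    return fluid / len(fps_samples)


def speech_degraded(polqa_score, threshold=POLQA_THRESHOLD):
    """True when a POLQA score falls below the degradation threshold."""
    return polqa_score < threshold


# Hypothetical samples for illustration only.
print(fluid_fraction([30, 24, 15, 12]))
print(speech_degraded(3.1))
```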

- Baseline (unlimited) conditions

Under unconstrained network conditions, most SDKs performed at acceptable levels, but Zoom stood out in maintaining both efficiency and quality.

- Audio

- Zoom consistently delivered high POLQA scores with minimal delay.

- Competitors were generally close in unlimited conditions, but Zoom retained a margin of advantage.

- Video quality and delay

- Zoom SDKs held top-tier VMAF and VQTDL scores, with smooth framerates and negligible freezes.

- Competitors like Agora and LiveKit performed well at baseline, but others showed more variability.

- Resource usage

- CPU and GPU utilization for Zoom SDKs remained within reasonable ranges, showing no excessive overhead.

- Zoom SDK 2.X showed a strong improvement over the 1.X version, with the lowest CPU and memory usage.

- Some competitors (like Vonage) showed higher CPU spikes under load.

Summary of detailed findings

- Zoom led in audio quality across virtually all conditions, particularly under packet loss where other vendors faltered.

- Zoom 2.X showed marked improvements in stability over 1.X, with fewer freezes and more consistent quality.

- Bandwidth recovery remains challenging for all vendors, but Zoom generally degraded more gracefully than peers.

- Competitors like Agora occasionally matched Zoom in isolated scenarios (e.g., minimizing freezes or delay), but Zoom consistently delivered a better balance of quality and stability.

The testing confirms that SDK quality is not equal across vendors. While most platforms deliver acceptable performance in unconstrained environments, differences become clear under stress conditions. Across packet loss, bandwidth limitations, and multi-user layouts, Zoom’s SDKs consistently provided a more resilient and stable experience.

- Audio strength: Of the vendors tested, Zoom delivered the clearest, most intelligible speech under adverse conditions, maintaining POLQA scores well above the degradation threshold where other vendors struggled.

- Video consistency: Zoom preserved quality and smoothness more effectively, avoiding the steep drops in VMAF and VQTDL scores that competitors experienced.

- Network resilience: Zoom sustained higher, steadier bitrates, which translated into fewer freezes and less visible quality loss, particularly with the improvements seen in Zoom 2.X over 1.X.

No SDK was immune to challenges at extreme packet loss or severe bandwidth constraints. However, Zoom distinguished itself by striking the best balance between maintaining quality and minimizing disruption. For developers embedding communications into their applications, these results suggest that Zoom’s SDKs for Web offer a more dependable foundation for delivering consistent, real-world user experiences.